Nowadays many companies are making a considerable effort to guarantee the constant sharing of information, knowledge and expertise across their organizations. It comes as no surprise that Information Technology plays a key role in responding to this challenge.

A very popular IT solution for organizing enterprise information is the company portal. From an IT professional's perspective, portals combine the enterprise data and applications that reside on the company intranet into a tightly integrated infrastructure. From a business perspective, portals give users easy access to data and applications through personalized views.

Business Intelligence is also an important part of this discussion for companies that want to use their intranet portal to deliver reports and dashboards to end users. For example, one of our customers recently asked us to investigate the integration capabilities of BusinessObjects XI R3 with the leading portal solution on the market, Microsoft SharePoint.

In this post I will introduce the integration options and their benefits. Given its complexity, the topic also promises to be an interesting focus for future blog articles and further technical investigation.

Why Integrate BusinessObjects with SharePoint?

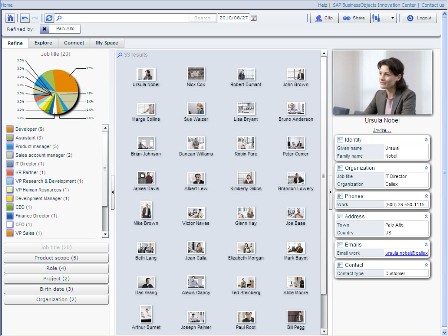

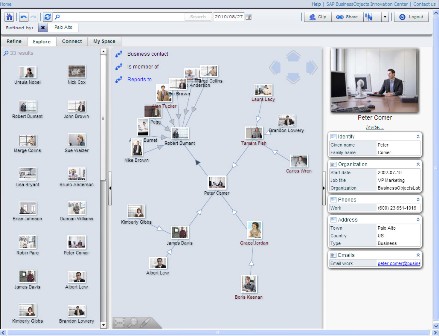

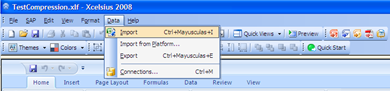

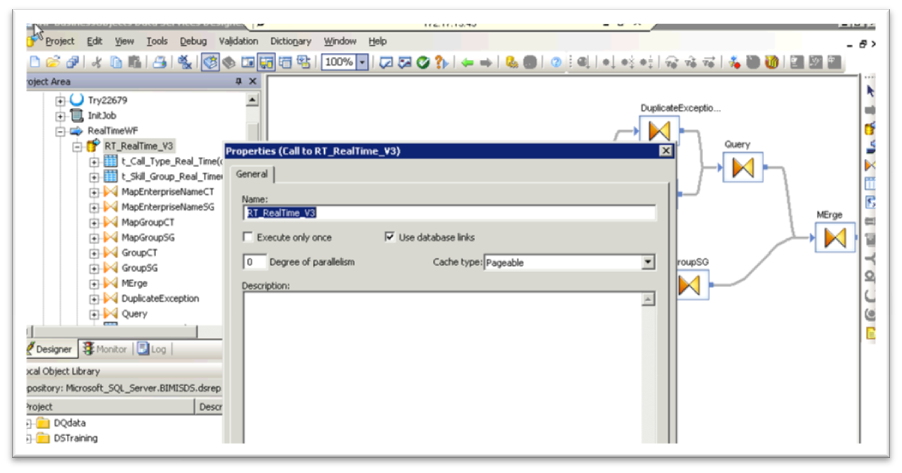

Integrating BusinessObjects with SharePoint allows users to view and manage information within a single portal interface. The integration grants access to all business intelligence resources, such as interactive Xcelsius dashboards, Crystal Reports, Web Intelligence documents, and any Microsoft Office documents stored in the CMS repository. Users can also navigate their BusinessObjects personal/favorites folders, public folders, and inbox, as well as personal and corporate categories, from within SharePoint.

The clear advantages for business users are:

- A single, easy point of access to any kind of content (BI and otherwise), compared to InfoView, which is a powerful tool but still represents an additional, BI-only interface.

- A simplified and more efficient use of software licenses, eliminating redundant licenses for users who typically only view BI content and have no need to edit reports. SharePoint allows these users to view and refresh Crystal or WebI reports from within the portal.

From a system administrator's point of view, integration with SharePoint offers the possibility of reducing duplication in the backend infrastructure and consolidating BI tools with other technologies in one enterprise portal with a single security model.

While the benefits of such an implementation are clear, I found that there is still some uncertainty surrounding the available deployment options. Counting both new and old releases, several different versions of BusinessObjects and SharePoint are in use within organizations today. Over the past few years SAP has released multiple versions of the “SharePoint Portal Integration Kit” for BusinessObjects, and both XI R2 and XI R3 can be paired with SharePoint 2003 or 2007.

Both BusinessObjects Enterprise and Edge customers are entitled to download the integration kit from the SAP developers’ portal. Moreover, although SAP does not specifically advertise it, posts on www.forumtopics.com/busobj report successful implementations of BusinessObjects XI 3.1 with Java InfoView interfaces. This suggests that even companies that are not using a .NET interface should be able to exploit the integration capabilities of BusinessObjects with the portal.

However, a .NET interface appears to be the ideal setup for achieving full integration with the Microsoft platform. The integration kits for Microsoft SharePoint 2007 and Microsoft SharePoint 2003 have gained acceptance in the marketplace but have limited capabilities. As a result, SAP recently released an additional product intended to provide all of the .NET InfoView capabilities from within the SharePoint interface. It has been released under the name "Integration Option for Microsoft SharePoint software 1.0".

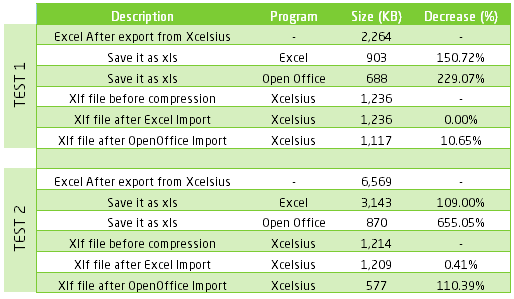

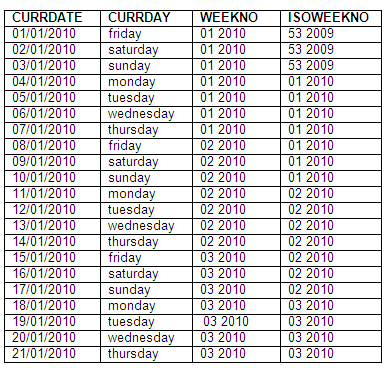

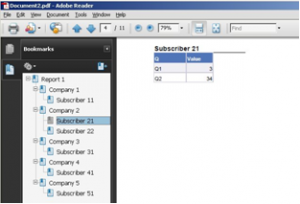

The following table, from an official SAP source, clearly shows how superior this option is compared to the previous integration kits:

Further information on the available SharePoint integration kits can be found at www.sdn.sap.com. Integration kits are free of charge and all direct zip file downloads are available here.

On a final note, it is worth speculating on what is to come in the near future. Regrettably, the current versions of the Portal Integration Kit (PIK) and the Integration Option for Microsoft SharePoint (IOMS) do not support SharePoint 2007 64-bit; all existing products integrate only with 32-bit versions of SharePoint. But not to worry: with the recent launch of SharePoint 2010, which is available in 64-bit mode only, SAP is catching up with a dedicated version of the PIK. According to rumors on the SAP developers’ forums, the 64-bit integration kit will be released by mid-2011, following the launch of BusinessObjects XI R4 later this year.

Will this be the chance to improve the integration capabilities with Java InfoView interfaces? We can’t say just yet. But stay tuned for updates on this topic and for more technical insights in future blog articles.