SAP BI Services - Part 1

Over the last couple of years I have been part of a team developing quite a few SAP BusinessObjects Dashboards (Xcelsius). One of the key requirements for developing them is a consistent data source. Historically we have used all sorts of techniques that SAP BusinessObjects has made available, from QAAWS to Live Office. Each method has its pros and cons, and there are situations in which using one over another makes sense.

In this article I will focus on the latest method I have discovered: BI Services.

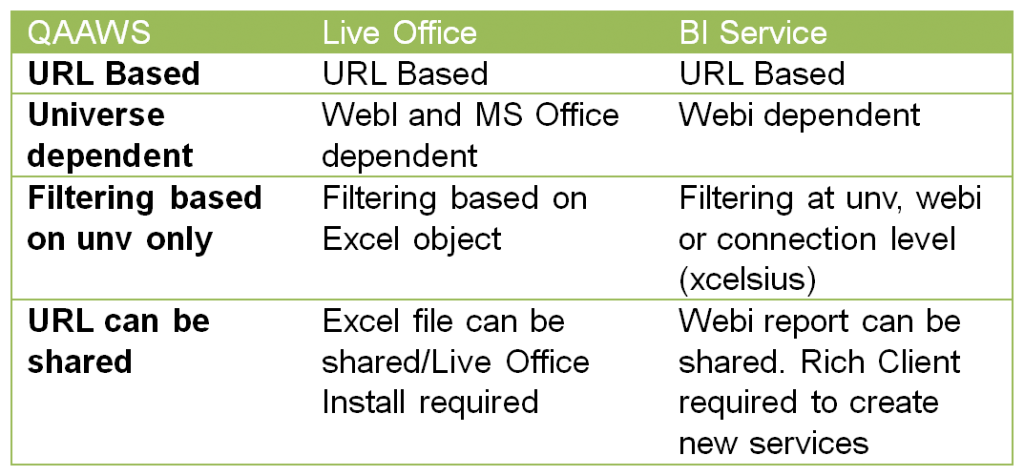

Comparing the three methods most frequently used, the following table shows their common characteristics as well as differences:

The main advantages I see in using the BI Services method are:

- Using WebI as an aggregation engine

- Robust Query/Block organization

- Enterprise re-usability

- Filter capabilities at SAP BusinessObjects Dashboards (Xcelsius) connector level

- Additional Metadata

- Performance increase by using the WebI server’s engine/cache

Basic Requirements

Now let’s talk about how we can implement this solution in our Business environment.

As indicated above the BI Service is dependent on a WebI document. This means that it gets its data from a WebI report block. Also note that it is only possible to use this functionality if you have the WebI Rich Client application installed and you have enterprise permissions on the application. For our particular example we are using a client tools installation with version 3.1 SP 2.4.

Configuring the BI Service CMS Server

If you meet the two requirements above, then let’s go ahead and open the WebI report that contains the block that will serve as the data source for our BI service.

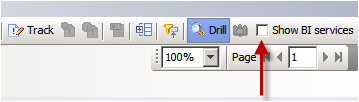

Once the report is open you will see a check box on the top right of the WebI Rich Client application. We need to mark it to display the BI Services panel. The following panel will appear on the right side of the application:

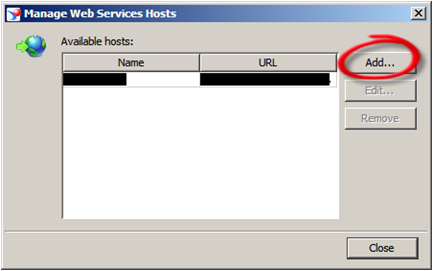

Now we need to configure the CMS server we will be accessing by clicking on the manage servers button.

Click on Add and enter the details corresponding to your SAP BusinessObjects system. The input parameters are the CMS name and the dsws URL (which should look something like http://yourserver:port/dsws).

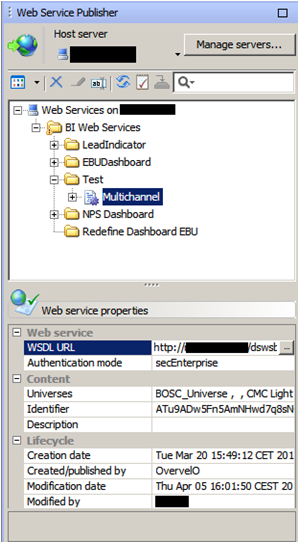

Once the server has been configured, select it from the list and click on the refresh button so all BI Web Services available are displayed.

A list of BI Web Services will appear if any exist in the system. The BI Web Services can be organized in folders, and each BI Web Service can have one or more blocks of data. We will talk more about these organization structures later in this blog.

You can see the 3 levels of organization in the screenshot below:

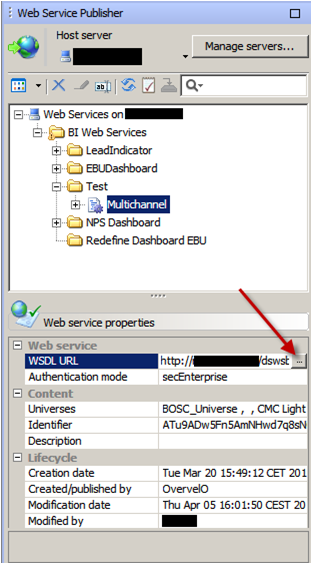

In order to consume one of the available BI Services you will need its WSDL URL, which is one of the properties that appear when you select the block you wish to consume from the list.

Click on the three dots button on the right so you can see the BI Service definition.

The BI service description should look similar to the screenshot below.

NOTE: If you can’t see a page similar to the one below, then the BI service URL is not accessible from the machine you are querying from and that might cause trouble later on.

Click on the wsdl link.
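If you would rather check reachability from a script than from the browser, here is a minimal Python sketch. It assumes the service publishes a WSDL 1.1 document (root element `definitions` in the standard WSDL namespace); that is my assumption, not something verified against every BusinessObjects version. The function names are made up for this example, and you would pass in the WSDL URL shown in the block properties.

```python
import urllib.error
import urllib.request
import xml.etree.ElementTree as ET

# Namespace of WSDL 1.1 documents (assumed format of the BI service description).
WSDL_NS = "http://schemas.xmlsoap.org/wsdl/"


def looks_like_wsdl(body: bytes) -> bool:
    """Return True if the payload is XML whose root is a WSDL 1.1 <definitions>."""
    try:
        root = ET.fromstring(body)
    except ET.ParseError:
        return False
    return root.tag == f"{{{WSDL_NS}}}definitions"


def wsdl_is_reachable(wsdl_url: str, timeout: float = 10.0) -> bool:
    """Fetch the URL and check that what comes back looks like a WSDL document."""
    try:
        with urllib.request.urlopen(wsdl_url, timeout=timeout) as response:
            return looks_like_wsdl(response.read())
    except (urllib.error.URLError, OSError, ValueError):
        # Unreachable host, HTTP error, timeout or malformed URL.
        return False
```

Run `wsdl_is_reachable(...)` from the machine that will host the dashboard: if it returns False there, the connector is likely to hit the same problem described in the note above.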

Creating a new BI Service

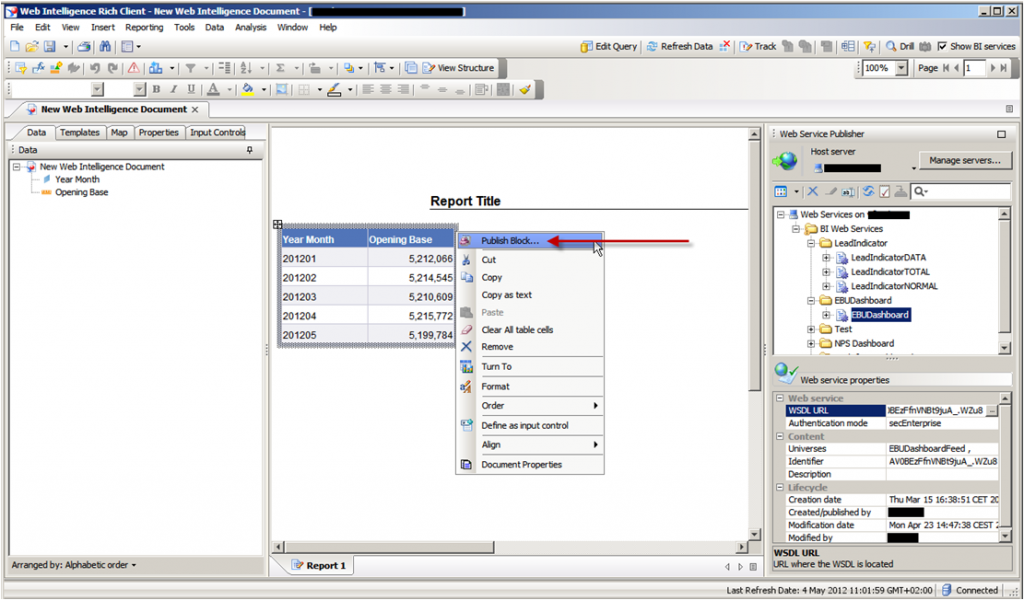

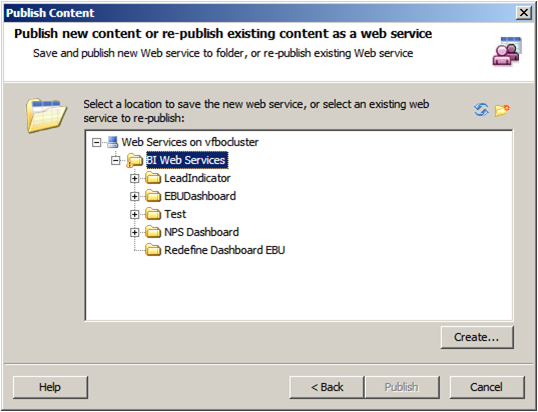

Select the block from the WebI report and right click on it. A context menu will appear with its first value being “Publish block”. Select this option.

In some cases the below warning might appear. If the WebI report you are using as a source is not published in the SAP BusinessObjects enterprise repository then you can’t create a new service.

This is the initial screen for the BI service publishing wizard:

You need to define a name for the block. The block is the smallest piece of organization for the BI service and it is equivalent to the table you have selected as a data source.

The block can be part of an existing BI service or you can create a new one. Each BI Service can contain one or many blocks, and BI Services can be organized in folders. All of these selections are made on the following screen:

For publishing a block on an existing BI service just select the BI service and press the Publish button.

To create a new service, select the folder and click on the Create button below. To create a new folder click on the New Folder button on the upper right side.

So now that your BI service has been created it is time to start consuming it with SAP BusinessObjects Dashboards (Xcelsius).

In the next part of this blog we will talk about SAP BusinessObjects Dashboards (Xcelsius) connectivity to BI services and what we can do to manipulate our new data source.

If you have any questions or tips, leave a comment below.

Implementing SAP Rapid Marts XI 3.2: Lessons Learned

I would like to share with you two lessons learned about the implementation of SAP Rapid Marts XI 3.2, version for SAP Solutions. In our particular case the customer did not allow us to make any modification to the out-of-the-box solution, and this must be taken into account when reading the article.

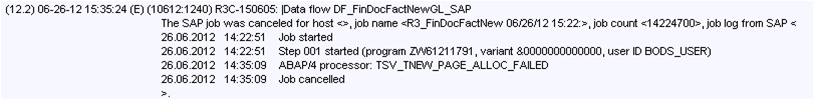

Lesson 1: Don’t be scared by the TSV_TNEW_PAGE_ALLOC_FAILED Error

If part of your implementation of SAP Rapid Marts includes General Ledger, Inventory or Cost Center you will probably have to perform a massive extraction of information from SAP ERP during your initial load. Most probably your customer will have been working with their ERP for quite some time, and you are likely to face the following error:

At this point, you will possibly start looking at configuration of SAP Data Services or you will start trying to tune the SAP ERP configuration in order to achieve the end-to-end execution. In general terms, you will have a headache trying to solve this problem and most likely none of the configurations will work…

The truth is out there… After many hours trying to tune configurations you will understand that the only solution is to modify the ABAP extraction itself. BAD NEWS: your customer does not allow you to do this, because it means altering the out-of-the-box product. GOOD NEWS: SAP Support has already tackled this problem and published SAP Note 1446203 - Multiple Query Transforms in ABAP Data Flows. The note confirms that your conclusion is correct: this is a memory allocation error on the SAP solutions server, indicating that the server has run out of memory for the ABAP program generated by SAP Data Services.

The SAP Note includes an attachment with ATL files that tune all the ABAP extractions of the SAP Rapid Marts XI 3.2 package. After applying these ATLs to the out-of-the-box solution, your extraction will work like a charm.

Lesson 2: How to improve performance of Delta Load for FINANCIAL_DOCUMENT_FACT

This lesson is useful to you if SAP Rapid Mart XI 3.2 General Ledger is part of your implementation.

After finishing the initial load of this SAP Rapid Mart you will start running the delta load. At this point you may be shocked by the bad performance. Depending on your requirements, this delta load may simply not be affordable.

In basic terms this delta load tries to rebuild all the information of the current fiscal year. We ran an extensive performance analysis and the conclusion was clear: the logic for the processing of delta loads to the table FINANCIAL_DOCUMENT_FACT was causing serious performance issues to our environment.

Only one possible solution was in sight: introducing new logic for the processing of delta loads to the table FINANCIAL_DOCUMENT_FACT. Breathe deeply, because this means re-inventing the SAP solution, and that can clearly jeopardize your project.

We decided to go back to the SAP Support team with this topic, looking for a “magical solution”, and they had one! Once again there was a solution, in SAP Note 1557975 - Poor Performance of Delta Load for FINANCIAL_DOCUMENT_FACT.

The SAP note clearly defines a scenario like ours and provides an ATL file to tune the delta load for the FINANCIAL_DOCUMENT_FACT. Again, after applying the solution provided the delta load worked perfectly.

In conclusion, I would like to mention that after many years working with different support teams, I’m impressed with the capability and escalation levels of the SAP Support team. As with many other support teams you may have to push to get a solution, but I don’t know many others that can escalate your request all the way to a discussion with the director of development of a product, or provide you with solutions that fit your environment perfectly.

That’s all folks! I hope these two tips help you to speed up your SAP Rapid Marts implementations. If you have any questions please leave a comment below.

Tips for installing SAP BusinessObjects BI4 in Spanish

The Spanish installation of SAP BusinessObjects BI4 is said to crash frequently, and even when it does not, something is still wrong on the server side: the SAP BusinessObjects administration console does not work as it should, and certain formulas used on the front-end side malfunction. This article explains some tricks that can be applied when installing SAP BusinessObjects in Spanish so customers can successfully migrate to the new SAP BO BI4.

Folders Security Configuration

When configuring the security for a folder in the SAP BusinessObjects administration console, an issue appears. It can be easily reproduced by installing the software in Spanish, logging in to the CMC and starting to configure security. The following error appears when opening Folders > Top Level Folder Security:

"A server error occurred during security batch commit: Request 0 of type 44 failed with server error : Plugin Manager error: Unable to locate the requested plugin CrystalEnterprise.ScopeBatch on the server. (FWB 00006) "

The only solution is to change the Regional Settings of the server from Spanish (Spain) to English (United Kingdom); this resolves the issue.

UserResponse Formula Use

An issue occurs in Web Intelligence when the Preferred Viewing Locale is Spanish and users refresh reports with prompts: when the =UserResponse() formula is used, a numeric prompt is interpreted as text in an incorrect scientific format.

The issue can be reproduced taking one object from the official SAP “STS Southeast Demo” Universe, retrieving one object with a prompt and applying the UserResponse() formula as indicated below

In the example above the UserResponse() formula should evaluate to “201010” instead of “2.0101e5”. This is a change from previous software versions and can cause serious issues: UserResponse() is frequently used in formulas and filters, so a migration project involving reports that rely on it could be dramatically extended.

A workaround for this is, for the time being, to keep the Preferred Viewing Locale in English for every user who refreshes information.
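To see why the scientific format hurts, here is a small Python illustration (this is not WebI code, and it does not replace the locale workaround). It shows that the two strings are the same number but different text, which is exactly what breaks a formula or filter that compares the prompt response as a string. The helper name `normalise_numeric_text` is made up for this example.

```python
from decimal import Decimal, InvalidOperation


def normalise_numeric_text(value: str) -> str:
    """Rewrite a numeric string (including scientific notation) as plain digits.

    Anything that is not a finite number is returned unchanged.
    """
    try:
        number = Decimal(value)
    except InvalidOperation:
        return value
    if not number.is_finite():
        return value
    return format(number, "f")


expected = "201010"    # what the report logic was written against
returned = "2.0101e5"  # what UserResponse() yields under the Spanish locale

same_text = expected == returned                              # string comparison
same_number = normalise_numeric_text(returned) == expected    # numeric comparison
print(same_text, same_number)
```

The string comparison fails even though the underlying value is identical, so every report that tests the prompt value as text would need to be revisited.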

So, summarizing, the pieces of advice proposed for a successful installation in Spanish language are:

- Keep the server’s Regional Settings in English (United Kingdom)

- Configure your BI LaunchPad users to keep the Preferred Viewing Locale in English

- Keep the BI LaunchPad users Product Locale property setting to Spanish (Spain)

As a result, users will visualise the whole front-end in Spanish language with no errors.

This solution has been tested on the latest 4.0 SP4, which includes Feature Pack 3.

The benefit of these workarounds is to provide the market of Spanish language customers with the ability to start migrating to the new SAP BI4 platform with success, and enjoy the product in Spanish language with no bugs. Hope this helps. If you wish to leave your comment or opinion, please feel free to do so below!

B-tree vs Bitmap indexes: Consequences of Indexing - Indexing Strategy for your Oracle Data Warehouse Part 2

In my previous blog post, B-tree vs Bitmap indexes - Indexing Strategy for your Oracle Data Warehouse, I answered two questions related to indexing: which kinds of indexes we can use, and on which tables/fields we should use them. As I promised at the end of that post, it’s now time to answer the third question: what are the consequences of indexing in terms of time (query time, index build time) and storage?

Consequences in terms of time and storage

To tackle this topic I’ll use a test database with a very simplified star schema: 1 fact table for the General Ledger accounts balances and 4 dimensions - the date, the account, the currency and the branch (like in a bank).

To give an idea of the table sizes, Fact_General_Ledger has 4.5 million rows, Dim_Date 14,000, Dim_Account 3,000, and Dim_Branch and Dim_Currency fewer than 200 each.

We’ll suppose here that the users can query the data with filters on the date, branch code, currency code, account code, and the 3 levels of the Balance Sheet hierarchy (DIM_ACCOUNT.LVLx_BS). We assume that the descriptions are not used in filters, but in results only.

Here is the query we will use as a reference:

    select
        d.date_date,
        a.account_code,
        b.branch_code,
        c.currency_code,
        f.balance_num
    from fact_general_ledger f
    join dim_account a on f.account_key = a.account_key
    join dim_date d on f.date_key = d.date_key
    join dim_branch b on f.branch_key = b.branch_key
    join dim_currency c on f.currency_key = c.currency_key
    where
        a.lvl3_bs = 'Deposits With Banks' and
        d.date_date = to_date('16/01/2012', 'DD/MM/YYYY') and
        b.branch_code = 1 and
        c.currency_code = 'QAR' -- I live in Qatar ;-)

So, what are the results in terms of time and storage?

Some of the conclusions we can draw from this table are:

- Using indexes pays off: queries are much faster (about 100 times), whatever the chosen index type is.

- Concerning the query time, the index type doesn’t seem to really matter for tables which are not that big. It would probably change for a fact table with 10 billion rows. There seems however to be an advantage to bitmap indexes and especially bitmap join indexes (have a look at the explain plan cost column).

- Storage is clearly in favor of bitmap and bitmap join indexes.

- Index build time is clearly in favor of b-tree. I’ve not tested the index update time, but the theory says it’s much quicker for b-tree indexes as well.

Ok, I’m convinced to use Indexes. How do I create/maintain one?

The syntax for creating b-tree and bitmap indexes is similar:

Create Bitmap Index Index_Name ON Table_Name(FieldName)

In the case of b-tree indexes, simply remove the word “Bitmap” from the statement above.
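If you don’t have an Oracle instance at hand and just want to watch an index change a query plan, here is a minimal sketch using Python’s built-in sqlite3 module as a stand-in. Keep in mind the assumptions: SQLite only offers b-tree indexes, so this cannot reproduce the bitmap results; the table is a cut-down copy of the fact table with generated rows; and the index name `idx_fact_branch` is made up for the example.

```python
import sqlite3


def query_plan(conn: sqlite3.Connection, sql: str) -> str:
    """Return the human-readable detail column of SQLite's EXPLAIN QUERY PLAN."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql).fetchall()
    return " | ".join(row[3] for row in rows)


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE fact_general_ledger ("
    "account_key INTEGER, date_key INTEGER, branch_key INTEGER, "
    "currency_key INTEGER, balance_num REAL)"
)
# Generated sample rows, only there so the table is not empty.
conn.executemany(
    "INSERT INTO fact_general_ledger VALUES (?, ?, ?, ?, ?)",
    [(i % 3000, i % 365, i % 200, i % 5, float(i)) for i in range(10000)],
)

sql = "SELECT balance_num FROM fact_general_ledger WHERE branch_key = 1"

plan_before = query_plan(conn, sql)  # no index yet: full table scan
conn.execute("CREATE INDEX idx_fact_branch ON fact_general_ledger (branch_key)")
plan_after = query_plan(conn, sql)   # the optimizer can now search the index

print(plan_before)
print(plan_after)
```

The first plan reports a scan of the whole table, the second a search using the new index, which is the same shift you should look for in Oracle’s explain plan.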

The syntax for bitmap join indexes is longer but still easy to understand:

    create bitmap index ACCOUNT_CODE_BJ
    on fact_general_ledger(dim_account.account_code)
    from fact_general_ledger, dim_account
    where fact_general_ledger.account_key = dim_account.account_key

Note that during your ETL, it is better to drop/disable your bitmap and bitmap join indexes and re-create/rebuild them afterwards, rather than update them. This is supposed to be quicker (although I have not run any tests).

The difference between drop/re-create and disable/rebuild is that when you disable an index, the definition is kept. So you need a single line to rebuild it rather than many lines for the full creation. However the index build times will be similar.

To drop an index: “drop index INDEX_NAME”

To disable an index: “alter index INDEX_NAME unusable”

To rebuild an index: “alter index INDEX_NAME rebuild”
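The disable/rebuild pattern is easy to script around an ETL run. Here is a minimal Python sketch that only generates the statements listed above for a set of index names; the function name is made up, and actually executing the statements against Oracle (connection, error handling) is deliberately left out.

```python
from typing import List, Tuple


def etl_index_statements(index_names: List[str]) -> Tuple[List[str], List[str]]:
    """Build the statements to run before and after a load.

    Before the load each index is marked unusable; after the load it is rebuilt.
    """
    pre_load = [f"alter index {name} unusable" for name in index_names]
    post_load = [f"alter index {name} rebuild" for name in index_names]
    return pre_load, post_load


pre, post = etl_index_statements(["ACCOUNT_CODE_BJ"])
for statement in pre + ["-- run the load here --"] + post:
    print(statement)
```

Because the index definition is kept, the script only needs the index names, which is the practical advantage of disable/rebuild over drop/re-create.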

Conclusion

The conclusion is clear: USE INDEXES! When properly used, they can really boost query response times. Think about using them in your ETL as well: making lookups can be much faster with indexes.

If you’d like to go any further I can only recommend that you read the Oracle Data Warehousing Guide. To get it just look for it on the internet (and don’t forget to specify the version of your database – 10.2, 11.1, 11.2, etc.). It’s quite an interesting and complete document.

B-tree vs Bitmap indexes - Indexing Strategy for your Oracle Data Warehouse Part 1

Some time ago we saw how to create and maintain Oracle materialized views in order to improve query performance. But while materialized views are a valuable part of our toolbox, they definitely shouldn’t be our first attempt at improving query performance. In this post we’re going to talk about something you’ve already heard about and used, but we will take it to the next level: indexes.

Why should you use indexes? Because without them you have to perform a full read on each table. Just think about a phone book: it is indexed by name, so if I ask you to find all the phone numbers of people whose name is Larrouturou, you can do that in less than a minute. However if I ask you to find all the people who have a phone number starting with 66903, you won’t have any choice but reading the whole phone book. I hope you don’t have anything else planned for the next two months or so.

It’s the same thing with database tables: if you look for something in a non-indexed multi-million-row fact table, the corresponding query will take a lot of time (and the typical end user doesn’t like to sit for 5 minutes in front of a computer waiting for a report). If you had used indexes, you could have found your result in less than 5 (or 1, or 0.1) seconds.
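The phone book analogy can be sketched in a few lines of Python. The entries below are made-up sample data: looking up by name goes straight through the index (a dictionary here), while looking up by number prefix has to read every entry.

```python
from collections import defaultdict

# Made-up sample entries: (name, phone number).
phone_book = [
    ("Larrouturou", "66903 111"),
    ("Martin", "55120 222"),
    ("Larrouturou", "44871 333"),
    ("Dupont", "66903 444"),
]

# The "index": built once, it maps each name to its numbers.
name_index = defaultdict(list)
for name, number in phone_book:
    name_index[name].append(number)


def numbers_for(name):
    """Indexed lookup: one dictionary access, no matter how big the book is."""
    return name_index.get(name, [])


def names_with_prefix(prefix):
    """Non-indexed lookup: every entry of the book has to be read."""
    return [name for name, number in phone_book if number.startswith(prefix)]


print(numbers_for("Larrouturou"))
print(names_with_prefix("66903"))
```

The cost of the second function grows with the size of the book, which is the full table read we want to avoid.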

I’ll answer the following three questions: Which kind of indexes can we use? On which tables/fields shall we use them? What are the consequences in terms of time (query time, index build time) and storage?

Which Kind Of Indexes Can We Use?

Oracle has a lot of index types available (IOT, Cluster, etc.), but I’ll only speak about the three main ones used in data warehouses.

B-tree Indexes

B-tree indexes are mostly used on unique or near-unique columns. They maintain good performance during update/insert/delete operations, and therefore are well suited to operational environments using third normal form schemas. But they are less frequent in data warehouses, where columns often have a low cardinality. Note that B-tree is the default index type – if you have created an index without specifying anything, then it’s a B-tree index.

Bitmap Indexes

Bitmap indexes are best used on low-cardinality columns, and can then offer significant savings in terms of space as well as very good query performance. They are most effective on queries that contain multiple conditions in the WHERE clause.

Note that bitmap indexes are particularly slow to update.
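To make the idea concrete, here is a toy Python model of a bitmap index (a sketch of the concept only, not of Oracle’s actual storage format). Each distinct value gets one bitmap with a bit per row, and a WHERE clause with several conditions becomes a bitwise AND of the matching bitmaps. The column values are made-up sample data.

```python
from collections import defaultdict


def build_bitmap_index(values):
    """Map each distinct value to an int whose bit i is set when row i holds it."""
    index = defaultdict(int)
    for row_id, value in enumerate(values):
        index[value] |= 1 << row_id
    return index


def matching_rows(bitmap):
    """Turn a bitmap back into the list of row numbers whose bit is set."""
    return [i for i in range(bitmap.bit_length()) if (bitmap >> i) & 1]


# Two low-cardinality columns of the same six-row table.
currency = ["QAR", "USD", "QAR", "EUR", "QAR", "USD"]
branch = [1, 1, 2, 1, 1, 2]

currency_idx = build_bitmap_index(currency)
branch_idx = build_bitmap_index(branch)

# WHERE currency = 'QAR' AND branch = 1  ->  one bitwise AND
both = currency_idx["QAR"] & branch_idx[1]
print(matching_rows(both))
```

This also hints at why updates are slow: changing one row’s value means touching the bitmaps of both the old and the new value.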

Bitmap Join Indexes

A bitmap join index is a bitmap index for the join between tables (2 or more). It stores the result of the joins, and therefore can offer great performance on pre-defined joins. It is especially well suited to star schema environments.
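As a toy illustration in Python (again a conceptual sketch with made-up keys and codes, not Oracle’s implementation): the join between the fact table and the dimension is resolved once, when the index is built, so that each dimension attribute value points directly at fact rows.

```python
from collections import defaultdict

# Made-up dimension: account_key -> account_code.
dim_account = {10: "A100", 11: "A200"}
# Made-up fact table column: the account_key of each fact row.
fact_account_keys = [10, 11, 10, 10, 11]


def build_bitmap_join_index(fact_keys, dim_lookup):
    """Map each dimension attribute value to a bitmap of the fact rows that join to it."""
    index = defaultdict(int)
    for row_id, key in enumerate(fact_keys):
        # The join is done here, at build time, not at query time.
        index[dim_lookup[key]] |= 1 << row_id
    return dict(index)


bji = build_bitmap_join_index(fact_account_keys, dim_account)
print({code: bin(bitmap) for code, bitmap in bji.items()})
```

A filter on the account code can then be answered from the index alone, without visiting the dimension table at query time.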

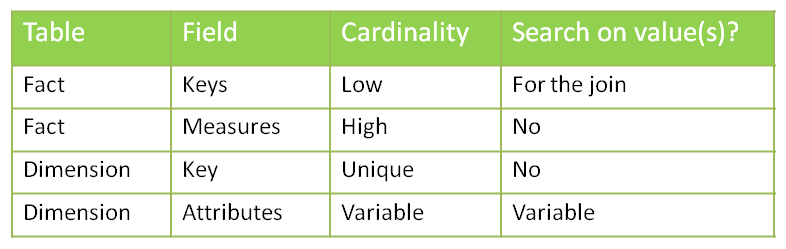

On Which Tables/Fields Shall We Use Which Indexes?

Can we put indexes everywhere? No. Indexes come with costs (creation time, update time, storage) and should be created only when necessary.

Remember also that the goal is to avoid full table reads. If the table is small, the Oracle optimizer will decide to read the whole table anyway, so we don’t need to create indexes on small tables. I can already hear you asking: “What is a small table?” A million-row table definitely is not small. A 50-row table definitely is small. A 4532-row table? I’m not sure. Let’s run some tests and find out.

Before deciding about where we shall use indexes, let’s analyze our typical star schema with one fact table and multiple dimensions.

Let’s start by looking at the cardinality column. We have one case of uniqueness: the primary keys of the dimension tables. In that case, you may want to use a b-tree index to enforce the uniqueness. However, if you consider that the ETL preparing the dimension tables already made sure that dimension keys are unique, you may skip this index (it’s all about your ETL and how much you trust it).

We then have a case of high cardinality: the measures in the fact table. One of the main questions to ask when deciding whether or not to apply an index is: “Is anyone going to search for a specific value in this column?” In the example I’ve developed, I assume that no one is interested in knowing which account has a value of 43453.12. So no need for an index here.

What about the attributes in the dimension? The answer is “it depends”. Are the users going to do searches on column X? Then you want an index. You’ll choose the type based on the cardinality: bitmap index for low cardinality, b-tree for high cardinality.

Concerning the dimension keys in the fact table, is anyone going to perform a search on them? Not directly (no filters by dimension keys!) but indirectly, yes. Every query which joins a fact table with one or more dimension tables looks for specific dimension keys in the fact table. We have two options to handle that: putting a bitmap index on every dimension key column, or using bitmap join indexes.
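The decision rules above can be condensed into a small Python helper. Take it as a rough sketch: the function name is made up, and both thresholds are placeholder values I picked for the example (the post itself leaves “what is a small table?” open), so tune them against your own tests.

```python
def suggest_index(table_rows, distinct_values, used_in_filters,
                  small_table_rows=1000, low_cardinality_ratio=0.01):
    """Suggest an index type for one column.

    small_table_rows and low_cardinality_ratio are hypothetical thresholds,
    not values taken from Oracle documentation.
    """
    if not used_in_filters:
        return "none"    # nobody searches on this column (e.g. measures)
    if table_rows <= small_table_rows:
        return "none"    # the optimizer will read the whole table anyway
    if distinct_values / table_rows <= low_cardinality_ratio:
        return "bitmap"  # low cardinality
    return "b-tree"      # high cardinality or near-unique


print(suggest_index(4_500_000, 200, used_in_filters=True))
print(suggest_index(4_500_000, 4_500_000, used_in_filters=True))
```

For the dimension keys in the fact table the helper would answer “bitmap”, which matches the first of the two options above; bitmap join indexes remain a separate design choice.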

Further Inquiries...

Are indexes that effective? And what about the storage needed? And the time needed for constructing/refreshing the indexes?

We will talk about that next week in the second part of my post.

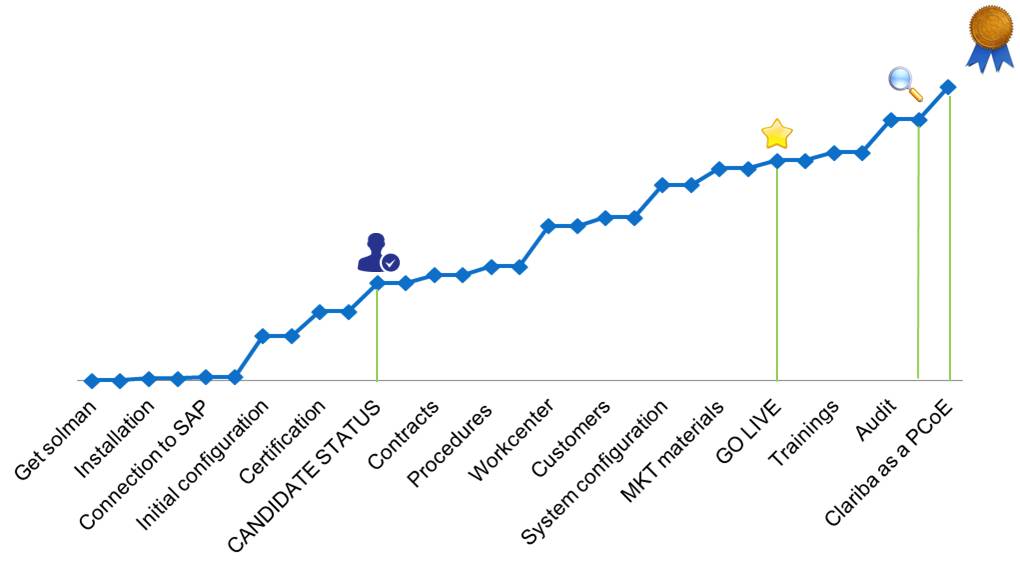

Clariba Obtains the SAP Partner Center of Expertise Certification

SAP service and support audit confirms that Clariba can support its customers in accordance with SAP’s current technical and organizational standards, thereby receiving the Partner Center of Expertise (PCoE) Certification.

On the 30th of May Clariba employees received the amazing news: they had passed the final audit and SAP was granting Clariba Support Center the PCoE certification.

This was the finishing line of a process that started in 2011 and took a lot of effort from Clariba Support Center members, going from the installation of SAP Solution Manager (the system used to provide support), through certifying support center staff, creating all marketing materials and finally undergoing a 6-hour audit to evaluate if all requirements were in place.

“It’s been a very long and complex process, since it was our first experience with SAP native applications. Installing and configuring SAP Solution Manager has been a big challenge, but now it allows us to provide full SAP Enterprise Support to our customers. We use SAP Solution Manager to monitor our customer installations remotely as well as connect them with the resourceful SAP back-end infrastructure. In addition, SAP Solution Manager provides our support customers with a Service Desk available 24x7, to create and track their incidents and to ensure a fast and efficient service for very critical issues at any time” says Carolina Delgado, support manager at Clariba.

The Partner Center of Expertise (PCoE) certification affirms that the defined procedures, guidelines and certified support team members are available to provide qualified, timely and reliable support services for SAP BI Solutions.

“As a requirement for achieving the PCoE certification, all our support consultants hold the Support Associate - Incident Management in SAP BusinessObjects certification and, as a commitment to high quality and professionalism, we require all our consultants to be certified in the SAP BusinessObjects suite. Both accreditations ensure that our support staff has the required knowledge to efficiently address and resolve any technical issue that may arise. In addition, they can draw from a wide knowledge base that comes from many years of experience in SAP BusinessObjects installation and development, as well as from continuous interaction with the SAP community,” mentions Carolina.

With this in place, Clariba Support Center can offer VAR-delivered support to its clients, providing the knowledge and support tools for SAP BusinessObjects licenses through a single point of contact: a familiar, agile partner that is always available to customers in the event that any problems arise in their SAP BusinessObjects systems.

Carolina points out that the key benefit is the proximity to customers and their environments. “In most cases we are maintaining BI systems which have been deployed by Clariba itself. This gives us the advantage of better understanding the customer's environment, so that we can provide a more accurate and tailored service.”

As an SAP Partner Center of Expertise (PCoE), Clariba can offer its clients:

- a fully qualified staff according to SAP quality standards;

- real-life SAP BusinessObjects implementation, training and support experience;

- a relationship with the client organization built on trust and past successes;

- detailed knowledge of the organization’s history, systems and business processes when the BI system has been deployed by Clariba;

- staff trained for rapid issue resolution and focused on customer satisfaction;

- very strong cooperation and relationship with SAP experts worldwide and an integrated support platform that ensures the best quality of service.

For more information on Clariba´s Support Center offerings visit http://www.clariba.com/bi-services/support.php

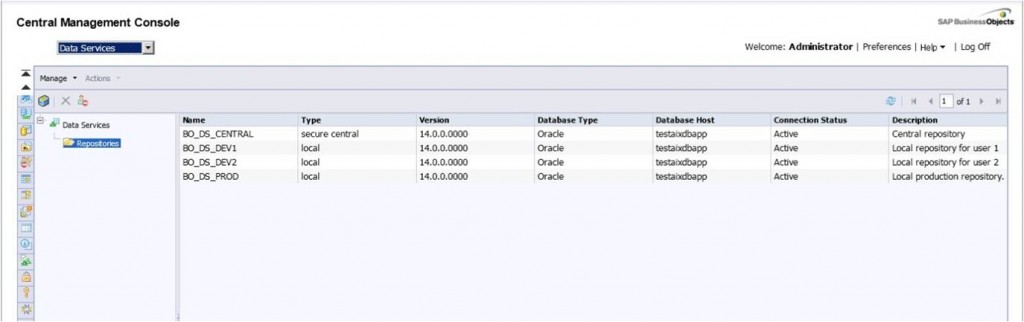

Working with Data Services 4.0 repositories

In my previous blog post, “Installing Data Services 4.0 in a distributed environment”, I mentioned that there is an important step to carry out after installing Data Services in a distributed environment: configuring the repositories. As promised, I will now walk you through this process.

Data Services 4.0 can now be managed using the BI Platform for security and administration. This puts all of the security for Data Services in one place, instead of it being fragmented across the various repositories. It means that Data Services repositories are managed through the Central Management Console (CMC), where you can set rights on individual repositories just like you would with any other object in the SAP BusinessObjects platform.

Now you can log into Data Services Designer or Management Console with your SAP BusinessObjects user ID instead of needing to enter database credentials. Once you log into Designer, you are presented with a simple list of Data Services repositories to choose from.

I advise anyone who is beginning to experiment with Data Services to use the repositories properly from the start! At first it might seem really tough, but it will prove to be very useful. SAP BusinessObjects Data Services solutions are built on 3 different types of metadata repositories: central, local and profile. In this article I am going to show you how to configure and use the central and the local repository.

The local repositories are used by individual ETL developers to store the metadata pertaining to their ETL code. The central repository is used to “check in” the individual work and maintain a single version of truth for the configuration items. This “check in” action gives you a version history from which you can recover older versions if you need them.

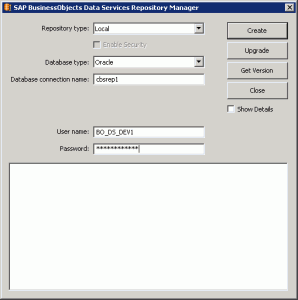

Now let’s see how to configure the local and central repositories in order to finish the Data Services installation.

So, let’s start with the local repository. First of all, go to the Start Menu and start the Data Services Repository Manager tool.

Choose “Local” in the repository type combo box. Then, enter the information to configure the meta-data of the Local Repository.

For the central repository, choose “Central” as the repository type and then enter the information to configure the metadata of the Central Repository. Check the “Enable Security” check box.

After filling in the information, press Get Version to check whether there is a connection to the database. If the connection is established, press Create.

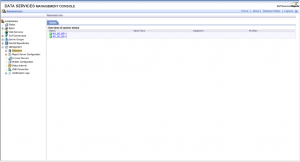

Once you have created the repositories you have to add them to the Data Services Management Console. To do that, log in to the DS Management Console. You will see the screen below.

This error is telling you that you have to register the repositories. The next step is to click on Administrator to register the repositories.

On the left pane, click on Management list item. Then click on Repositories and you will see that there are no repositories registered on it. In order to register them, click the Add button to register a repository in management console.

Finally, enter the repository information and click Test to check the connection. If the connection is successful, click Apply; you will then see the following image, which lists the repositories that you have created. DO NOT forget to register the central repository.

After that, the next logical step is to try to access Data Services using one of the local repositories. Once inside the Designer, activate the central repository that you have created. Data Services will display an error telling you that you do not have enough privileges to activate it.

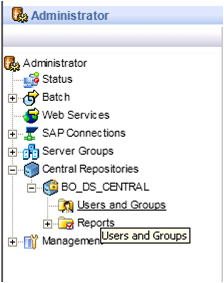

To be able to activate the central repository you have to assign security. To do that, go to the Data Services Management Console. In the left pane go to “Central Repository” and click on “Users and Groups”.

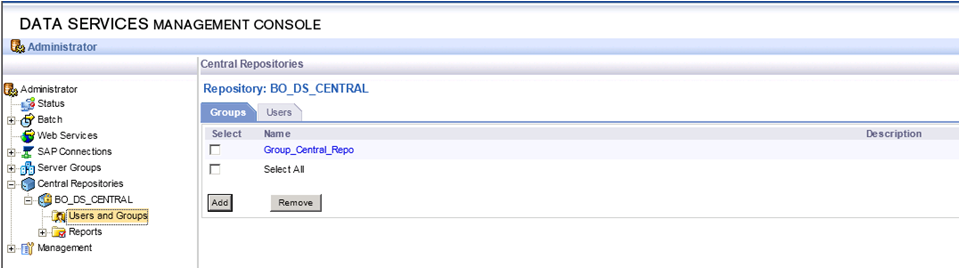

Once you are inside Users and Groups, click “add” and create a new group. When the group is created select it and click on the “users” tab which is on the right of the group tab (see the image below).

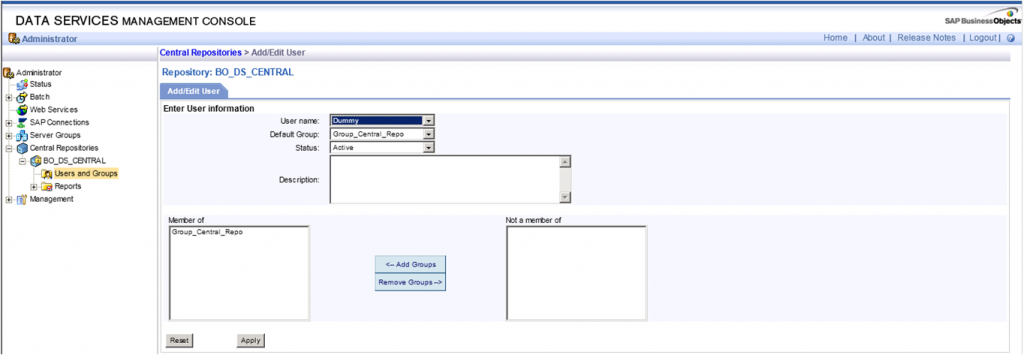

Now you can add the names of the users that can activate and use the central repository in the designer tool. As you can see in the image below the only user that you added in the example is the “Administrator”.

Now the last task is to configure the Job Server to finish the configuration.

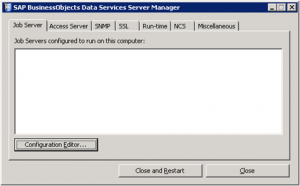

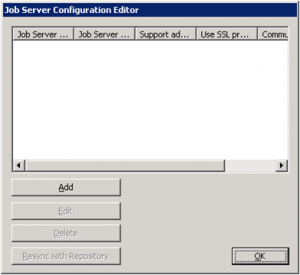

As always, go to Start Menu and start Server Manager Tool. Once you start the Server Manager the window below appears.

Press Configuration Editor and a new window will open. Press Add and you will have a new job server.

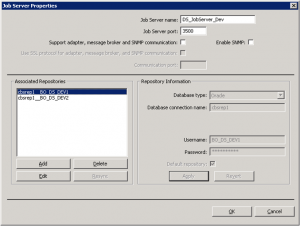

Keep the default port and choose a name or keep the default one for the new Job server. Then press Add to associate the repositories with the job server created.

Once you press “Add” you will be able to add information in the right part of the window “Repository Information”. Add the information of the local repositories that you have created in the previous steps.

At the end you will see two local repositories associated.

With this step you have finished the repository configuration. You can now manage security from the CMC, use regular BO users to log into the Designer, and add security to the Data Services repositories as you would for any other BusinessObjects application.

If you have any questions or other tips, share them with us by leaving a comment below.

SMEs Run SAP – Myth 4: SAP is not Agile Enough

The 4th and last post in our SAP myth-busting series, this article intends to show how SMEs can meet their need for speed with SAP.

A bigger business is a better business. It’s a common assumption, but it’s not strictly true. Small to medium-sized businesses (SMEs) have a number of advantages over their larger counterparts. Their size is often their strength – it makes them agile. In fact, most SMEs we speak to pride themselves on this. They can respond faster to changes in the market and take advantage of new insight and opportunities faster than larger organizations.

To capitalize on their agility, SMEs need systems that play to this strength. Unfortunately, some SMEs get bogged down by manual processes. They rely on off-the-shelf software that doesn’t quite meet their needs and depend on spreadsheets for their business intelligence. All these things slow their businesses down and mean they’re more likely to miss opportunities. In this environment, executives often resort to making important decisions on a gut feeling rather than the latest data.

That’s why it’s always a surprise to hear an SME wondering if SAP software will slow them down. Maybe the perception is that SAP software is only for enterprise organizations, but this couldn’t be further from the truth. It’s another business myth. Rather than slow these businesses down, SAP helps them become more agile and able to capitalize on opportunities faster.

Clariba has helped companies streamline their internal reporting processes, achieving in one particular case an 80% reduction in the time it took to produce monthly internal reports. We also help organizations reduce their reliance on manual data, improving reporting accuracy, reducing errors and promoting decisions based on solid information rather than just gut feeling.

More importantly, we have the expertise to speed up the implementation process, so that your company can start enjoying the benefits of an SAP BusinessObjects Business Intelligence solution in a short time frame. Furthermore, if you are worried about the impact the change will have on your employees, Clariba has best-in-class trainers, with years of experience, ready to help your employees make the transition from a spreadsheet-based organization to the innovative and integrated information hub they will soon benefit from.

Contact us to discover how much SAP and Clariba can deliver to your company.