Let’s face it: an SSO implementation is not an easy task. Even though the steps are clear to us as BI consultants, there is always a chance that something is missing, or that we need to change a configuration because the real environment differs from the manual. Such differences often go undetected, since there are many types of environments and many configurations that may deviate from the guides.

The purpose of this post is not to provide another guide for AD+SSO implementation, but rather to offer a checklist that you can go through when the implementation is not successful and the SSO with AD is not working as desired. This list can also be helpful when performing the task, as it is highly recommended to test all the steps during the procedure.

The first four checks below are common validations that will help you fix errors that can be difficult to detect. They relate to tasks on the Active Directory server and usually need to be double checked, as they are often performed by other people (e.g. the AD maintenance team). Best practice is to plan to check every single task, especially the ones that are not performed by you or your team.

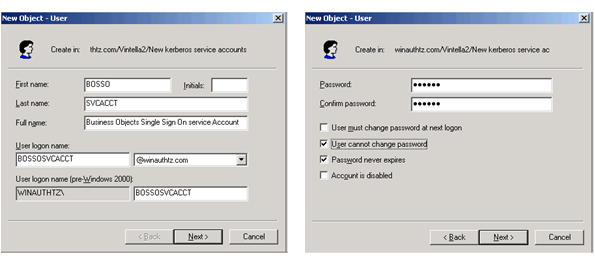

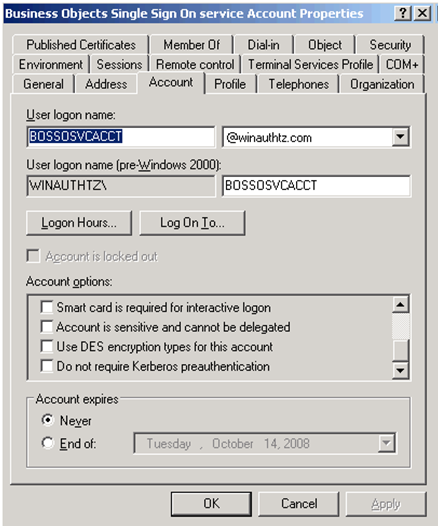

- Test the service account for Kerberos delegation -> verify that the account has the “Password never expires” option set.

- Encryption to use for the account -> RC4 is used when DES is not selected. For SAP BusinessObjects implementations on XI 3.x, RC4 is preferred, since RC4 support comes with JDK 1.5. On earlier versions (e.g. XIR2 with Java SDK 1.4.2), RC4 may not work without updating the JDK to 1.5.

- Verify the creation of the default SPN -> run “setspn -l” on the service account and check the output. It should look something like this:

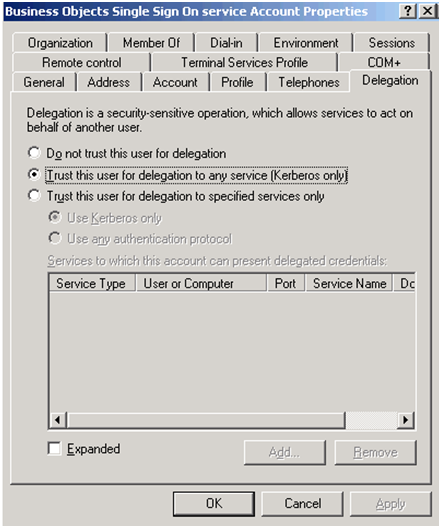
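If you want to check the SPN list in a script rather than by eye, the following is a minimal sketch. It assumes you have captured the text printed by “setspn -l” and that it has the usual shape: a “Registered ServicePrincipalNames for ...” header followed by one SPN per line. The function names and the account and SPN values in the usage note are made up for illustration.

```python
def parse_setspn_output(output):
    """Return the SPNs listed after the 'Registered ServicePrincipalNames' header."""
    spns = []
    seen_header = False
    for line in output.splitlines():
        stripped = line.strip()
        if not stripped:
            continue
        if stripped.lower().startswith("registered serviceprincipalnames"):
            seen_header = True
            continue
        if seen_header:
            spns.append(stripped)
    return spns


def has_spn(output, expected_spn):
    """Case-insensitive check that expected_spn is among the registered SPNs."""
    wanted = expected_spn.lower()
    return any(spn.lower() == wanted for spn in parse_setspn_output(output))
```

For example, `has_spn(captured_text, "BOSSO/svc-bo.example.com")` would return `True` only if that (hypothetical) SPN is listed for the account.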

- Verify that delegation is enabled on the Vintela SSO account -> look for the checkbox “Trust this user for delegation to any service (Kerberos only)” and make sure it is selected.

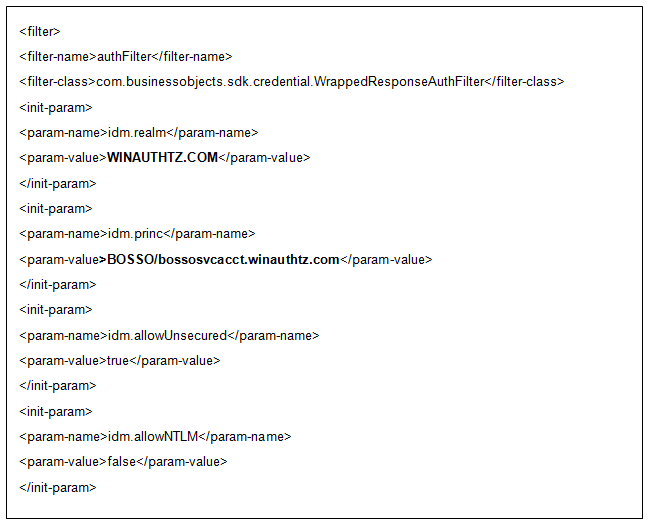
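The account settings checked so far (password expiry, DES versus RC4, and delegation) are also stored as bit flags in the account's `userAccountControl` attribute in Active Directory. The sketch below assumes you can read that numeric value yourself, for example from an LDAP browser or from your AD team; it only decodes the number and does not talk to AD. The function name is hypothetical.

```python
# Bit flags of the Active Directory userAccountControl attribute.
DONT_EXPIRE_PASSWORD = 0x10000      # "Password never expires"
TRUSTED_FOR_DELEGATION = 0x80000    # "Trust this user for delegation to any service"
USE_DES_KEY_ONLY = 0x200000         # "Use DES encryption types for this account"


def check_service_account(user_account_control):
    """Decode the flags relevant to the checklist from a userAccountControl value."""
    return {
        "password_never_expires": bool(user_account_control & DONT_EXPIRE_PASSWORD),
        "trusted_for_delegation": bool(user_account_control & TRUSTED_FOR_DELEGATION),
        "des_only": bool(user_account_control & USE_DES_KEY_ONLY),
    }
```

For an RC4 setup as described above, you would expect the first two entries to be `True` and `des_only` to be `False`.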

- In the BusinessObjects server, double check the files web.xml and server.xml -> review the lines that were added or modified and, if possible, redo the changes while keeping a copy of the original files. Some of the validations are:
  - a) server.xml -> Increase the default HTTP header size. Normally it is set to 16384, but if your AD contains users that are members of a lot of groups (50 or more), you may need to increase it.
  - b) web.xml -> Change the default authentication to secWinAD when using SSO. Remember that siteminder must be set to false and vintela to true. Remove the comments from the auth filter. After that, set idm.realm to your default REALM (it must be in capital letters) and set idm.princ to the default SPN. These last three steps are shown as follows:

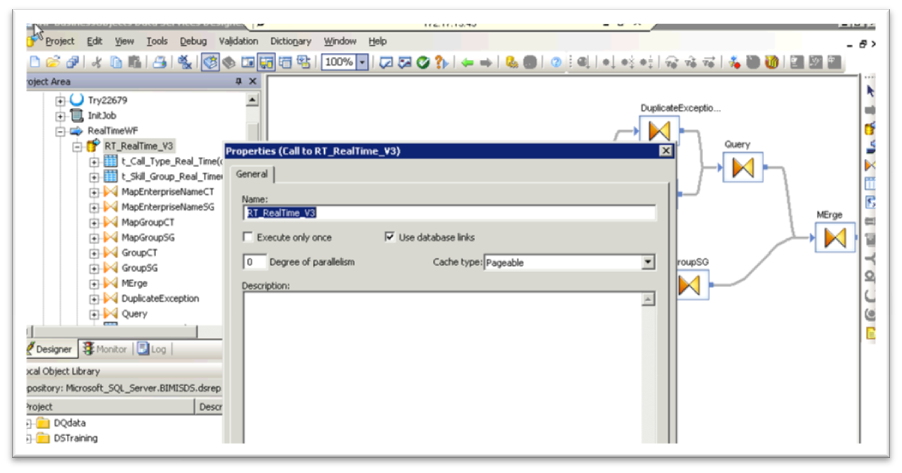

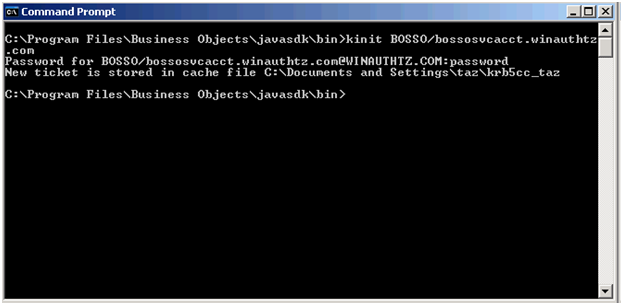
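The server.xml and web.xml validations above can also be scripted. The following is a rough sketch, not an official tool. It assumes that the header size is the `maxHttpHeaderSize` attribute of the Tomcat `Connector` element, and that the web.xml settings are stored as `param-name`/`param-value` pairs named `authentication.default`, `siteminder.enabled`, `vintela.enabled`, `idm.realm` and `idm.princ`. Check these names against your own files, as they may differ between versions.

```python
import xml.etree.ElementTree as ET


def local_name(tag):
    """Strip an XML namespace prefix such as '{http://...}web-app'."""
    return tag.rsplit("}", 1)[-1]


def max_header_sizes(server_xml):
    """Return maxHttpHeaderSize for each Connector (None where it is not set)."""
    sizes = []
    for elem in ET.fromstring(server_xml).iter():
        if local_name(elem.tag) == "Connector":
            raw = elem.get("maxHttpHeaderSize")
            sizes.append(int(raw) if raw else None)
    return sizes


def collect_params(web_xml):
    """Collect every context-param and init-param as a name -> value dict."""
    params = {}
    for elem in ET.fromstring(web_xml).iter():
        if local_name(elem.tag) in ("context-param", "init-param"):
            name = value = None
            for child in elem:
                if local_name(child.tag) == "param-name":
                    name = (child.text or "").strip()
                elif local_name(child.tag) == "param-value":
                    value = (child.text or "").strip()
            if name:
                params[name] = value or ""
    return params


def check_web_xml(web_xml):
    """Return a list of problems found; an empty list means all checks passed."""
    p = collect_params(web_xml)
    problems = []
    if p.get("authentication.default") != "secWinAD":
        problems.append("authentication.default is not secWinAD")
    if p.get("siteminder.enabled", "").lower() != "false":
        problems.append("siteminder.enabled is not false")
    if p.get("vintela.enabled", "").lower() != "true":
        problems.append("vintela.enabled is not true")
    realm = p.get("idm.realm")
    if not realm:
        # Commented-out XML is invisible to the parser, so a missing value
        # may simply mean the auth filter is still commented out.
        problems.append("idm.realm not found (is the auth filter still commented?)")
    elif realm != realm.upper():
        problems.append("idm.realm is not in capital letters")
    if not p.get("idm.princ"):
        problems.append("idm.princ is not set")
    return problems
```

Pass the file contents as strings, for instance `check_web_xml(open(path).read())`. Because XML comments are skipped by the parser, a filter that is still commented out shows up as a missing `idm.realm` and `idm.princ`.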

- Verify that the Vintela filter has been successfully loaded -> to do that, stop the Tomcat service, remove all logs in the Tomcat folder, and start the service again. Then search the stdout file for the “credentials obtained” message. If the credentials are obtained, the Vintela filter is being loaded successfully. If they are not, you can run kinit and check its output.
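The log search in this last check can be sketched in a few lines. This assumes the Tomcat stdout log contains the phrase “credentials obtained” when the filter loads correctly; the exact wording and the log file name can vary between versions, so treat the search string as an assumption to adjust. The function name is hypothetical.

```python
def find_credentials_lines(log_text, needle="credentials obtained"):
    """Return the log lines that contain the needle, ignoring case."""
    needle = needle.lower()
    return [line for line in log_text.splitlines() if needle in line.lower()]
```

If the returned list is empty after a clean restart, the filter probably did not obtain its credentials, and the kinit test is the next thing to try.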

If you have solved your problems by following the points in this post, congratulations! If not, don’t give up: keep searching in different forums, trace Tomcat (there are several configurations you can add on the console), scan packets from the clients related to SSO issues, or ask us for guidance. In the worst case scenario you may need to redo the implementation from scratch. Whatever the case may be, we’re sure that in the end you will succeed!