This post is about SAP BusinessObjects Dashboards (formerly known as Xcelsius) and its sometimes intricate way of working. Whether you develop dashboards regularly or are just beginning to work with the tool, you will notice some bugs that interfere with your development and slow you down. My main goal is to talk about the bugs, or "misfortunes", that I have commonly faced in SAP BO Dashboards (Xcelsius) and the workarounds I have found, to save you some time when working with this tool. My second objective is to open a discussion where you can comment on other SAP Dashboards issues and the solutions you have found.

Dashboard integration in SAP Crystal Reports

In this blog article I would like to show you how to embed a dashboard in a Crystal Report using flash variables. First, let me describe the scenario that led us to do this. We wanted to create a dashboard for an SAP GRC module, but we could not connect to the system directly with SAP BusinessObjects Dashboards (Xcelsius, for the most nostalgic). Beyond that, embedding a dashboard in Crystal has a clear advantage: the dashboard can be refreshed from Crystal Reports without a prior authentication. You will also be able to save the report (which displays the dashboard) as a PDF with saved data, and the dashboard will remain fully clickable and navigable.

How to load and read Web Services Data Store in Data Integrator

In this article I will show you, in 12 steps, how to load and read the information retrieved by a web service based on a Java application used as a source of information. This is very useful if, for example, you are building Java social media applications that read information from the Internet, or if you have built a Java application that returns information in a JSON or XML structure. I will show you how Data Services makes requests to a web service data source and interprets its replies.

If you need background information on the first steps of my process, I wrote a first post on how to use the Data Services SDK libraries to construct an AWTableMetadata in a Java application, followed by a post explaining how to access a Java application as a source of information using the Web Service datastore in SAP Data Services.

If you have already read my previous posts, let's jump into how to load and read a Web Services Data Store in Data Integrator.

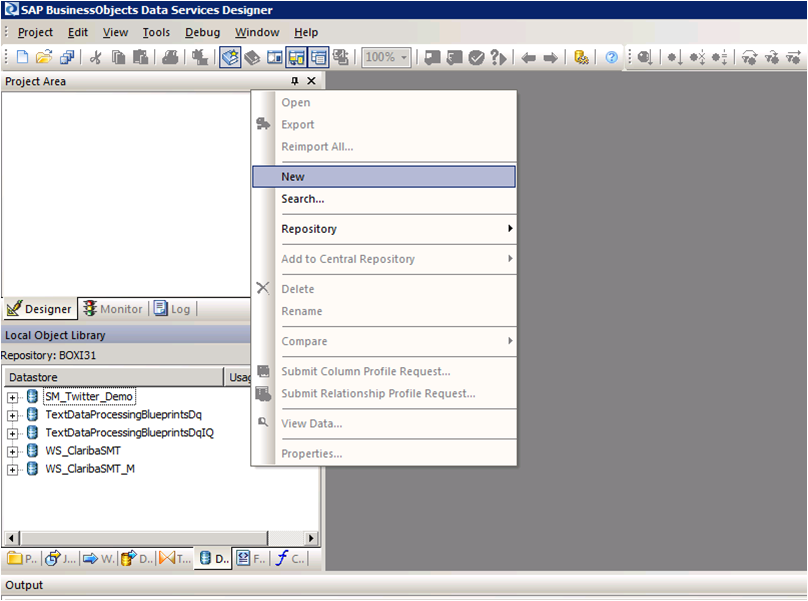

Step 1:

Open Data Services Designer. Go to the Data Store perspective, right-click and select New.

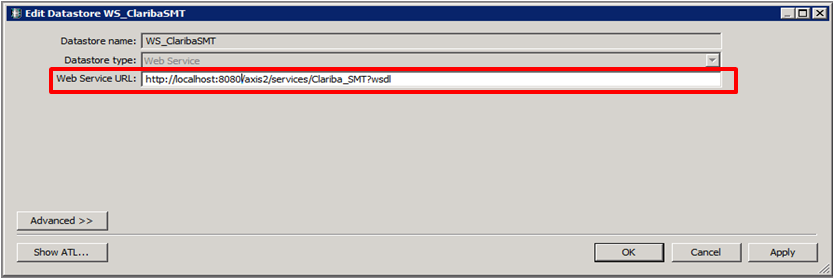

Step 2:

Set the name of the extractor and the URL where your web service WSDL is located (see my previous blog for reference).

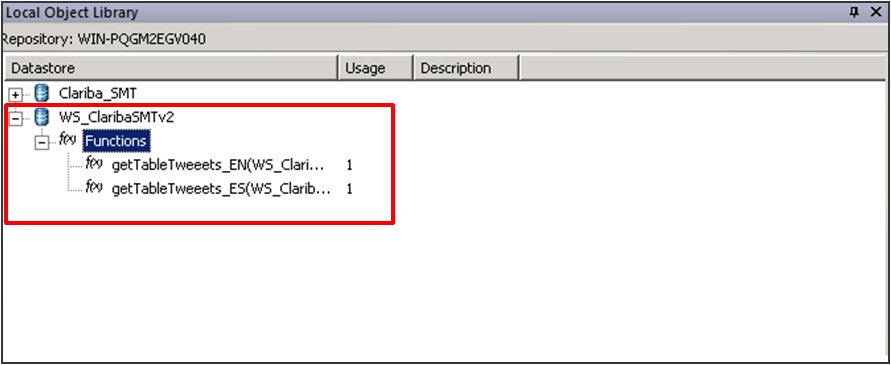

Step 3:

Right-click the “f(x)” symbol and select Import. Choose the functions from the web service that you are going to use. In this example we select “getTableTweeetsEN” and “getTableTweeetsES”.

Special Note:

To access these functions inside a transformation, we have to use the function call schema provided by Data Services. In this case the function getTableTweets_EN receives an input and returns a table (an AWTableMetadata table). This return type comes from our web service in a special nested form, which we will resolve with a couple of transformations, described in the following steps.

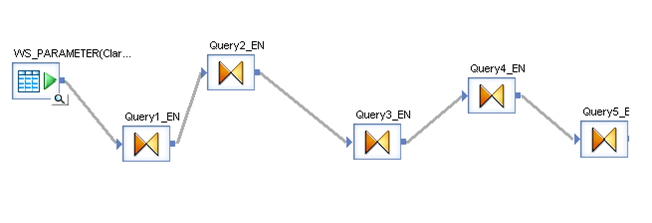

Step 4:

Select the input parameter for the functions; in this case it is a field from a table called “WS_Parameter”. We use that table as the source table and the first item in our data flow.

Step 5:

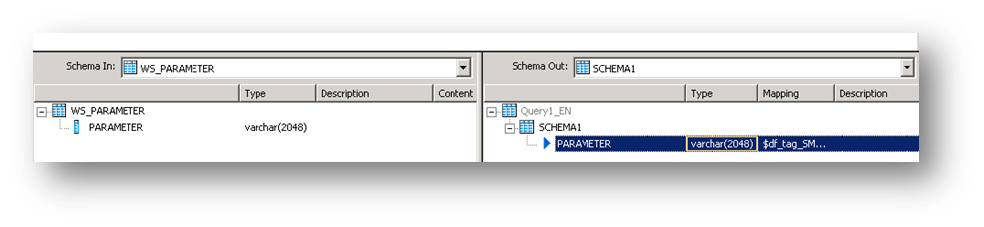

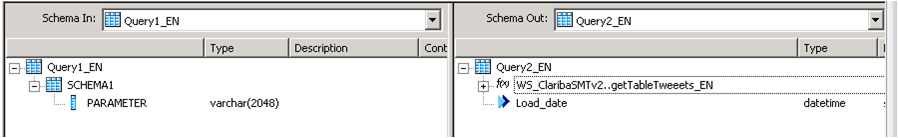

Insert a transformation as the second item in the data flow. In this first query (Query1_EN), we create a schema called Schema1 and assign the “Parameter” field coming from the database as an attribute of this schema.

Step 6:

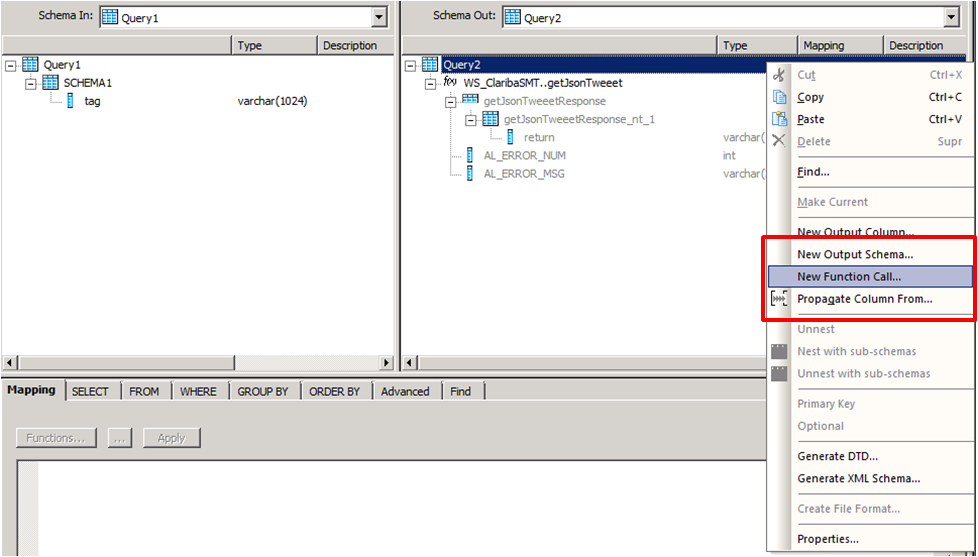

Create a second transformation (Query2_EN). This query calls the web service with the input parameter using the Function Call procedure. Right-click the schema table called Query2 and select New Function Call.

Step 7:

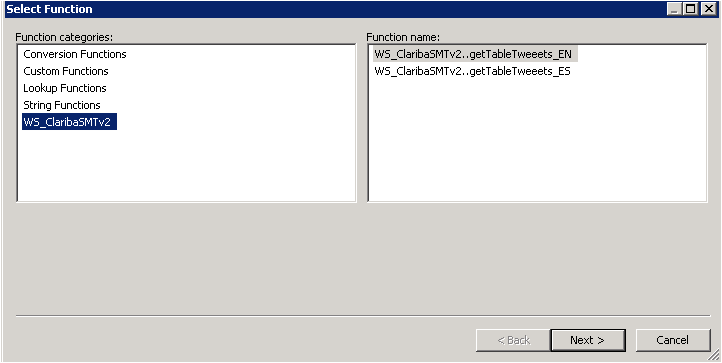

Select the WS_ClaribaSMT datastore in the left panel; the right panel shows the functions that we imported into the datastore. Select the first one, getJsonTweet (for English), and click Next.

Step 8:

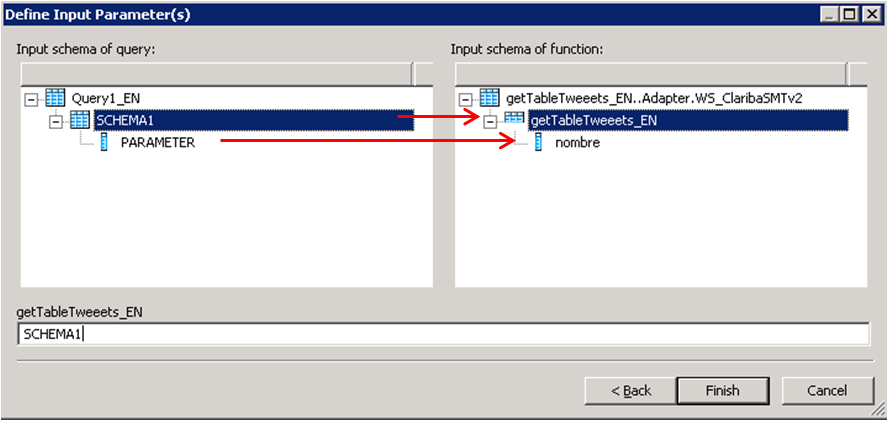

Map the new function call schema to Schema1. This is the structure used to call a web service in Data Services. In this case we are calling the function getJsonTweets_EN with the parameter nombre, a structure that matches our Schema1. Then click Finish.

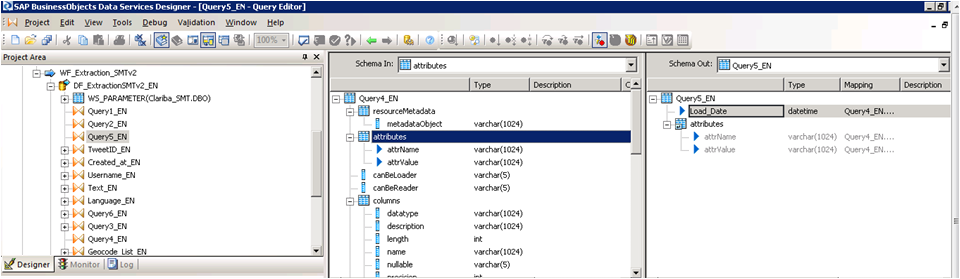

The final result will contain the function call. You can also add an attribute below the function call; here we add “load_date”, containing sysdate, to record the date of the data load.

Step 9:

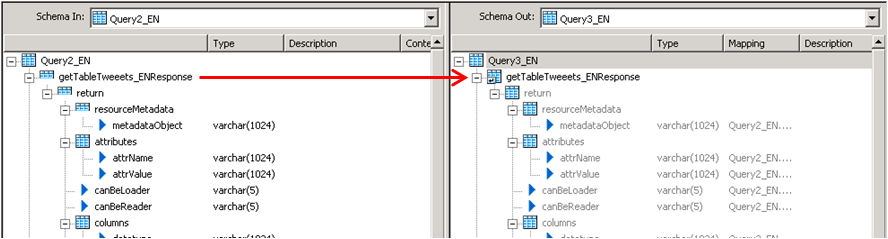

The third query interprets the data returned by the web service. The returned schema appears in the left panel. To capture it in Data Integrator, we need to unnest this schema until we reach the “return object” that contains the data.

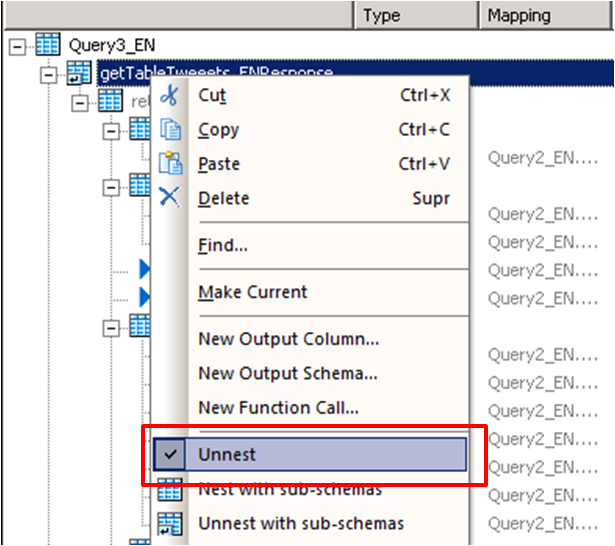

In the left panel, click getJsonTweetResponse and drag it into the right panel. Then right-click getJsonTweetResponse in the right panel and select the “Unnest” option. This splits the nested schemas; we capture the result in the next query.

Step 10:

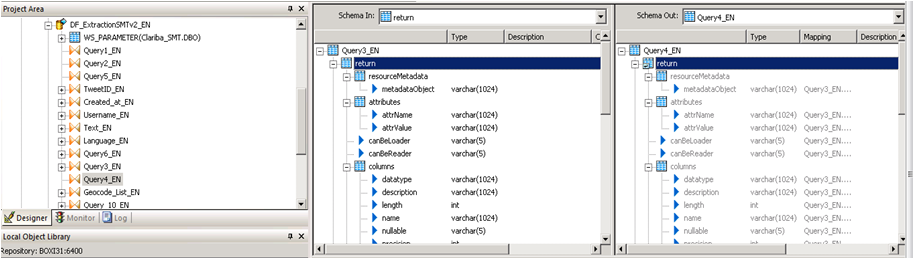

Repeat the same procedure in Query 4: drag getJsonTweetResponse to the right and unnest it.

Step 11:

Query 5_EN contains the final result: two variables that contain the header of the table, plus the load date.

Step 12:

The last step depends on the implementation and the business rules. The table returned will have this format.

Column 1 | Value 1

Column 2 | Value 2

...

Column N | Value N

Conclusion

This method applies particularly if you are using a function call schema and an array as the return type of your web service. If your source is something other than an application, the way the web service is resolved may differ. How you map the final table is up to you and your business needs; an easy solution is to add an ID to each row.
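To make the "add an ID to each row" idea concrete, here is a minimal sketch that works outside Data Services, on an exported copy of the name/value rows. It assumes (hypothetically) that the rows arrive as comma-separated `name,value` lines with no commas inside values, and that every record is made of the same number of consecutive pairs. The function name `pivot_pairs` is illustrative only and is not part of Data Services.

```sh
#!/bin/sh
# Hypothetical helper, not part of Data Services: pivots the name/value rows
# returned by the web service into one line per record, prefixed with an ID.
# Assumes comma-separated "name,value" rows (no commas inside values) and a
# fixed number of consecutive pairs per record, passed as the first argument.
pivot_pairs() {
  awk -F',' -v n="$1" '
    {
      pos = (NR - 1) % n                  # position of this pair inside its record
      if (pos == 0) {                     # first pair of a new record
        if (NR > 1) print line            # flush the previous record
        line = int((NR - 1) / n) + 1      # record ID derived from the row number
      }
      line = line "," $2                  # append the value; the name is implied by position
    }
    END { if (NR > 0) print line }
  '
}
```

For example, `printf 'user,alice\ntext,hello\nuser,bob\ntext,hi\n' | pivot_pairs 2` prints `1,alice,hello` and `2,bob,hi`. Deriving the ID from the row position only works if the web service always returns complete records in order. If it does not, a real key from the source is the safer choice.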

For more information, please read my previous posts or leave a comment below.

Spicing up your Dashboard with a clickable moving Ticker

Looking to add a little more to your visualizations? Spice them up with a clickable moving ticker! For those who are not familiar with Dashboard Design (formerly known as Xcelsius), a moving ticker is a banner that looks like a stock market ticker, displaying customized labels moving from right to left. The one described here is also clickable: clicking a label can trigger actions such as opening URLs.

We always try to build dashboards that people really use, and for that we need to find a balance between functionality and design. Design might not seem as important as functionality, but trust me: to get the attention of users, you need to build something that really catches their eye, such as this ticker, which is easily noticed because of its constant movement.

Step by step process

To help you make your Dashboards eye-catching, I am going to show you how to build a clickable ticker that opens URLs, using the following steps.

Let’s start by organizing our spreadsheet (see the example in Fig. 1) with the following information:

- Labels: Information that will be displayed on the ticker

- URLs: Links that will be opened when clicking on the labels

- Auxiliary info: cells containing the index, destination, status, key and URL to open, which are explained later on

When your spreadsheet is ready follow these steps:

1) Drag and Drop the ticker object to your canvas.

The ticker object can be found under the category “Selector”.

2) Configure the Ticker object’s properties.

In the General tab, assign the labels you would like to show on the dashboard.

Insertion type: Position

Destination: This cell is key as it will give the position number of the clicked label on the ticker.

For example, if you click the third label of the ticker, this cell will contain “3”; it changes whenever you click another label.

3) Drag and Drop a URL object to your Canvas.

The URL object can be found under the category “Web Connectivity”.

4) Configure the URL object’s properties and behavior.

URL: this cell must contain a VLOOKUP formula, as shown in Fig. 1, that returns the URL matching the position in the destination cell.

In the Behavior tab, under the Trigger Behavior properties, you will find:

Trigger cell: this must be the same as the destination cell of the Ticker (Sheet1!D$4 in this case, see Fig. 2).

Check the “When Value Changes” option.

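Hide this button by entering different values in the status and key cells of its Dynamic Visibility properties. A component is only visible when the status cell matches the key, so the button stays hidden while its trigger still opens the URL.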
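The ticker, the VLOOKUP and the trigger together behave like an index lookup followed by a "fire on change" check. The shell sketch below is only an analogy to make that flow explicit; it is not Dashboards code. The table layout (position, label, URL) and the `intranet.example` URLs are hypothetical stand-ins for the spreadsheet in Fig. 1.

```sh
#!/bin/sh
# Hypothetical analogy of the ticker + URL button logic (not Dashboards code).
# Each row: label position, label text, URL. Positions and URLs are made up.
TABLE='1,Sales report,http://intranet.example/sales
2,Stock levels,http://intranet.example/stock
3,HR news,http://intranet.example/hr'

# Exact-match lookup of the URL for a clicked position,
# similar to VLOOKUP(position, table, 3, FALSE) in the spreadsheet.
url_for_position() {
  printf '%s\n' "$TABLE" | awk -F',' -v pos="$1" '$1 == pos { print $3; exit }'
}

# The Destination cell changes on each click; the URL button is triggered
# "When Value Changes", so a repeated click on the same label does nothing here.
last=""
for clicked in 3 3 1; do
  if [ "$clicked" != "$last" ]; then
    echo "open $(url_for_position "$clicked")"
    last=$clicked
  fi
done
```

Running it prints `open http://intranet.example/hr` followed by `open http://intranet.example/sales`. The second click on label 3 is ignored. If the real trigger behaves as its name suggests, clicking the same label twice in a row would likewise not reopen its URL.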

The outcome and conclusions

After completing these steps you should have built a clickable moving ticker which will spice up your visualization.

This solution will allow you to:

- Open Intranet/Internet URL’s from moving labels.

- Change visibility dynamically for graphs and images in your Dashboard Design visualization.

- Enhance the design and gain visibility of your visualizations

I hope this feature is useful to you and it brings positive feedback from your end users. Please feel free to leave a comment or question below.

Introduction to CMC Monitoring in SAP BO4 SP4

A few months ago a client asked me how to be alerted to problems (disconnections) in the different servers and services of their SAP BO4 SP4 without needing a person to constantly check their status. I then started researching the monitoring feature in the CMC. The truth is that there is little information about it: just a few pages in the Platform Administrator Guide and some scattered posts and references around the web. In this post I compile the information I have found, explain the use of these features and give you a brief introduction to CMC Monitoring.

First of all, let me explain what CMC monitoring is. Monitoring is a CMC feature introduced with SAP BusinessObjects 4 that allows Administrators to see the usage and health of the BO system. It relies on watches, probes, metrics and alerts. Some of these are provided by default, which allows the Administrator to start playing around with monitoring without in-depth knowledge of the topic and to obtain really useful information about the status of the system. Before using this feature, we need to know the actions we must perform and the existing limitations.

We must also ensure that the monitoring information is stored in the Monitoring database. This is a Derby database, and it works independently of the other two databases in SAP BusinessObjects (the CMS repository and the Audit database). To activate the Monitoring database, log into the CMC, go to Applications and double-click the Monitoring Application. This opens the Properties window of the Monitoring Application. Make sure that “Enable Monitoring Application” is checked, then click Save and Close. If the option was not already checked, the final step is to restart the Adaptive Processing Server (APS). The data in the database is deleted each time it reaches 1 GB; this limit can be increased in the CMC.

Monitoring

To reach the monitoring section in the CMC, we need to go to Applications > Monitoring

There are 5 different tabs in this section: Dashboards, Metrics, Watchlists, Probes and Alerts. Let's run through each one:

1. Dashboards

This is the default tab and, as the name says, it is a dashboard divided into 5 parts:

- Overall Health: an icon showing whether the BO system is working correctly.

- Recent Alerts: here we can see the alerts triggered by the system in the last 24 hours.

- Graphical view of BI Landscape: this panel includes a graphical or tabular view of the watches within the system. We can drill down by clicking on them.

- KPIs Status: this part displays three Key Performance Indicators for the system. We can also drill down by clicking on them.

- Deployment Level Metrics: here we can see three useful metrics: running jobs, users and pending jobs.

2. Metrics

Metrics are individual pieces of information that help determine the health of the different components of the system.

The metrics we define will depend on the components we are monitoring and on our requirements. Examples of metrics are user login time, query execution time, CPU usage percentage and the availability status of a system service. We can use them in watches and alerts, setting thresholds for Warning and Danger states. There are around 250 predefined metrics, and we can create our own if necessary. Normally, the default ones are enough to check the status of almost everything in the system.

If we select a metric, we can see its historical and current values.

3. Watchlists

This tab lets us monitor the watches that we have created. Watches are thresholds on metrics that show whether a metric is in a healthy, warning or danger state.

Again, some watches are created by default. We can change their thresholds to adapt them to our system. We can also add notifications to watches, for example to send an email when the system is in danger. There are a lot of interesting options with watches, and I encourage you to try them yourselves.

Finally, we can decide which watches we want to see in the Dashboards tab.

4. Probes

Probes let us monitor our SAP BusinessObjects system using simulated application workflows that run through SDK-based scripts.

This is the most interesting part of CMC monitoring but, at the same time, the most difficult. Because probes can be created with an SDK, the power of this tab is huge: we could create almost anything we want. However, creating these probes is not easy, and examples on the web are scarce or very difficult to find. (I will try to add another entry in this blog with an example in the future.)

As I mentioned before, the system provides default probes. There are nine of them:

- CMS Logon Logoff probe: Monitors whether a user can successfully log on to the SAP BusinessObjects system and how long it takes.

- Crystal Reports service through Page and Cache Server probe: Monitors the availability and performance of the Crystal Reports service (through the page and cache servers).

- Crystal Reports service through Report Application Server probe: Monitors the availability and performance of the Crystal Reports service (through RAS).

- InfoView Probe: Monitors the availability and performance of logging on to and off the InfoView web application.

- Web Intelligence Service Monitoring probe: Tests the availability and performance of the Web Intelligence Service through the report servers.

- CMS Ping probe: Tests the core functionality of the CMS.

- CMS Cache probe: Tests the availability of the CMS cache.

- CMS Database Connection probe: Tests the connection to the CMS repository.

- Start and Stop Servers probe: Tests starting and stopping the different servers of the system.

5. Alerts

This is where all the alerts that we have set up in the Watchlists tab appear.

It is a list of the alerts that the system raises. We can see the details of each alert by clicking on it. These alerts can also send emails and perform actions, depending on what we have set in the watchlist.

How to use all in one

So far I have given an overview of the different monitoring tabs and what we can do with each of them.

To tap the full potential of monitoring, note that all the tabs are connected and we need to use them together. A good example is monitoring the response time to open a report in the system. For this, we use the "Web Intelligence Service Monitoring" probe, which logs in and tries to open and refresh a report in Web Intelligence. We then use the metric for the time the system takes to execute the probe and the metric that says whether the probe was successful. Next, we create a watch on these metrics: if the probe takes more than 5 seconds, it sends an email warning the Administrator that the system is working a bit slow, and if the probe fails or takes more than 15 seconds, it sends another email warning that the system is not working properly. We can schedule this probe to run on a daily basis.

With this simple scenario we can detect problems in the system automatically, before any user reports them. Moreover, the Alerts tab will show us anything wrong that we had not noticed before.
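The watch in this example boils down to two thresholds applied to the probe's duration and success metrics. The sketch below spells that decision out in shell purely to make the logic explicit. In the CMC it is configured on the watch rather than scripted. The function name and messages are hypothetical, and durations are assumed to be whole seconds.

```sh
#!/bin/sh
# Hypothetical sketch of the watch logic from the example above; in the CMC
# this is configured on the watch, not scripted. Thresholds: 5 s and 15 s.
# $1 = probe execution time in whole seconds, $2 = 1 if the probe succeeded.
classify_probe_run() {
  duration=$1
  success=$2
  if [ "$success" -ne 1 ] || [ "$duration" -gt 15 ]; then
    echo "DANGER: system is not working properly"     # failed, or slower than 15 s
  elif [ "$duration" -gt 5 ]; then
    echo "WARNING: system is working a bit slow"       # between 5 s and 15 s
  else
    echo "HEALTHY"
  fi
}
```

For instance, `classify_probe_run 8 1` prints the warning message, while `classify_probe_run 2 0` reports danger because the probe failed, however fast it was.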

Conclusion

In conclusion, by playing around with all of these features, we can accomplish really interesting things and get almost everything in our BO system under control. It may seem difficult to learn, but there is a lot we can do with the default settings alone. Once you are used to them, it is time to harness the power of self-created probes and add more personalized options to your monitoring.

I hope this introduction has helped you understand a bit better how CMC Monitoring works in SAP BO 4 SP4.

If you have any questions or suggestions, please leave a comment below.

Providing SSM data to SAP BusinessObjects reporting tools - Exhausting all scenarios

In the last two months I have worked with a couple of customers who were interested in integrating SAP Strategy Management 10.0 with SAP BusinessObjects BI Platform 4.0. In this post I would like to explain what that integration is about.

First of all, a brief introduction to the solution. SAP Strategy Management (SSM) allows a company to align its strategic plan and key objectives and spread them across the whole organization. It is an out-of-the-box Enterprise Performance Management solution in which you can insert the most important KPIs of your company into Balanced Scorecards or Strategy Maps and track their performance against the company's strategy. Within SSM you can also create initiatives to implement improvements or corrective actions and link them to objectives or KPIs.

Sometimes the customer needs reports or further analysis on top of SSM that cannot be covered by the standard functionality of the solution. In that case, the best option is to integrate SSM with the SAP BusinessObjects reporting tools. Some examples of what you can do with that integration:

- Implement bespoke Dashboards with your SSM KPIs and Objectives

- Implement universes and allow users to exploit information from SSM with Web Intelligence without consuming additional SSM licenses

- Implement pixel-perfect Crystal Reports on top of the SSM

- Foster ownership with Publications. For example, send a list of KPIs that are not performing well to the users responsible for them

To implement this integration, you can follow the SAP SSM Configuration Guide. However, in some cases documentation is lacking and you have to work outside the script. In other cases, the existing documentation has not yet been updated for BI 4.0 and SSM 10.0. Finally, sometimes the documentation is wrong or the software has bugs and you cannot set up the integration.

In this post we will analyze the following scenarios:

- Web Intelligence reporting on top of SSM Models

- Web Intelligence reporting on top of the SSM Data Model (Clariba-developed solution)

- Crystal Reports on top of SSM (exploring different options)

- Dashboards on top of SSM (exploring different options)

These scenarios have been implemented with the following software components:

- SAP NetWeaver 7.3 SP08

- SAP SSM 10.0 SP06

- SAP BusinessObjects BI Platform 4.0 SP05

- Crystal Reports 2011 SP05

- Dashboards 4.0 SP05

1. Web Intelligence reporting on top of an SSM Model

According to the SAP documentation, we can set up the ODBO Provider in order to build a Universe on top of SSM Models. The drawback is that we still have to use the Universe Designer instead of the BI 4.0 Information Design Tool. Below are the steps for setting up the ODBO Provider and implementing your first report on top of SSM:

- Go to your BO 4.0 server and make sure it has a Multidimensional Analysis Service. Stop the MDAS Server and the Connection Server in the Central Configuration Manager.

- Copy the ODBOProvider folder from the <drive>:\Program Files (x86)\SAP BusinessObjects\Strategy Management\InternetPub path of your SSM server to the BO server

- Run the SSMProviderReg.bat file on the BO server. Make sure you have administrator rights on the BO server. Once completed, check in regedit that you can find the SSMProvider.1 key in the Windows registry

- Modify the Windows registry to add the following string value at this path (assuming you are using 64-bit Windows): HKEY_LOCAL_MACHINE\SOFTWARE\Wow6432Node\SAP\SSM\ODBOProvider "servletUri"="/strategyServer/ODBOProviderServlet"

- Access the following path on the BO server and add the entry below: <drive>:\Program Files (x86)\SAP BusinessObjects\SAP BusinessObjects Enterprise XI 4.0\dataAccess\connectionServer\oledb_olap

```xml
<DataBase Active="Yes" Name="Strategy Management 10.0">
  <Aliases>
    <Alias>Strategy Management 10.0</Alias>
  </Aliases>
  <Library>dbd_sqlsrvas</Library>
  <Parameter Name="Family">SAP BusinessObjects</Parameter>
  <Parameter Name="Extensions">sqlsrv_as2005,sqlsrv_as,oledb_olap</Parameter>
  <Parameter Name="MSOlap CLSID">SSMProvider.1</Parameter>
</DataBase>
```

- Start the MDAS Server and the Connection Server

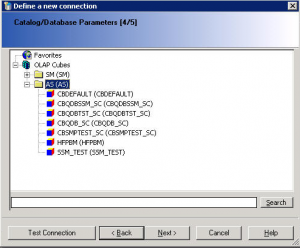

- Now we can go to the Universe Designer and start implementing a Universe on top of our Model. The first step is defining the connection. In the connection list (retrieved from the Connection Server) we can now see the new entry we added, Strategy Management 10.0.

- Define the connection parameters. You must be an SSM user with the proper permissions, and you must enter the server's fully qualified domain name (FQDN) and port.

- Once connected to the SSM server, you will see the list of available cubes. The AS category gives access to the measures (based on attributes and dimensions) in the Application Server model. The SM Adapter gives access to the strategy dimension, which represents the strategy management dimensions Scorecard and Initiative. Scorecard details not related to KPIs, such as comments, are not exposed.

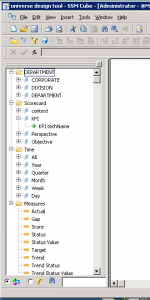

- Finally, we will see our universe with the available dimensions, the standard classes (Time and Scorecards) and the measures. You can display the technical names of the objects as details, or you can define hierarchies of Perspectives, Objectives and KPIs.

- Now we can publish the universe and go to Web Intelligence to start implementing our reports on top of the SSM models.

2. Web Intelligence reporting on top of SSM Data Model

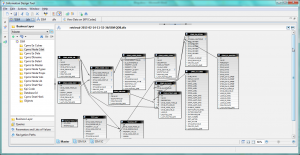

This is a solution you can implement if you have advanced knowledge of the SSM Data Model. You can implement a UNX Universe with the Information Design Tool by linking all the tables of Entry and Approval, the Scorecard nodes, the Cube Builder or the Initiatives. The advantage of this option is that you have access to extra information not available in the SSM cube, such as the Initiatives, the users related to specific KPIs and the attributes of the KPIs.

If you are interested in this option, please contact us. Keep in mind that it is not supported by SAP, but we have implemented it many times and we know it works.

3. Crystal Reports on top of SSM

The integration of SAP Crystal Reports with SSM can be done in 4 different ways:

- Implementing a Query as a Web Service on the Universe built in the previous steps: we managed to implement this scenario

- Connecting Crystal Reports to the Universe on top of the SSM Models built in the first step: this scenario did not work, even though we followed the instructions from SAP

- Using an OLE DB (ADO) connection: this option, not explained by SAP, is available if we install the ODBO provider but we have not managed to make it work

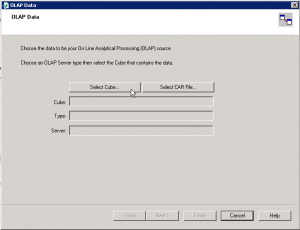

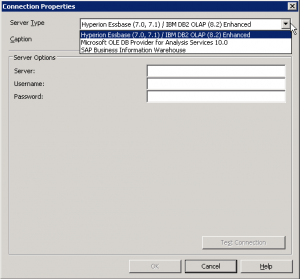

- Using the OLAP Connection: according to the SAP documentation, we can build an OLAP Cube Report in Crystal Reports. We should be able to select the Strategy Management option in the connection screen to insert the SSM connection data, but we have not been able to find that option

4. Dashboards using Web Services Connections

With Dashboards, there are two different ways to access the data:

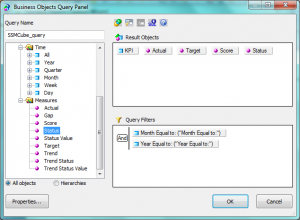

- Implement a Query as a Web Service (QaaWS) to retrieve the relevant information from the Universes we had implemented before.

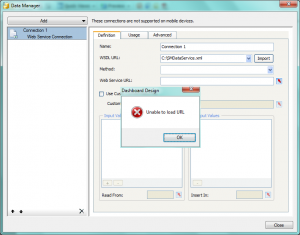

- Use the Web Services available in SAP NetWeaver to retrieve information from SSM: according to the SAP documentation, you need to download the WSDL files of the SMDataServiceService and CubeServiceService applications and call the functions within them. With our Dashboards 4.0 SP05 we have not been able to process the WSDL files, as the tool is unable to load the URL.

Summary

Providing SAP Strategy Management information to SAP BusinessObjects BI Platform 4.0 can enhance the capabilities of your strategy system. However, this integration is not easy, given the lack of information on the topic and the quality of the existing information. We tried to implement all the possible integration scenarios and succeeded with Web Intelligence, which is the route we recommend, as the Crystal Reports and Dashboards integrations were not working with SSM 10.0 and BI 4.0.

We will follow up on these issues and let you know if we manage to solve them. If you have any suggestions or have found a workaround for these issues, please leave a comment below.

Using Google Chrome with SAP BusinessObjects

We all know that there are many internet browsers available, but Google Chrome is definitely one of the most used today, so we have received a lot of feedback from our customers about using Chrome with SAP BusinessObjects.

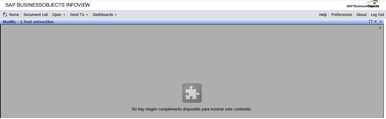

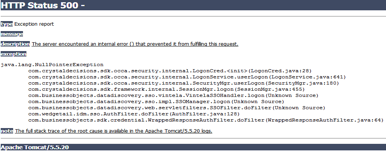

A main problem found by users is that, when using Google Chrome with InfoView or BI Launch Pad, a missing plug-in error screen appears when they try to modify a report, and an HTTP Status 500 error screen appears when they try to log in to SAP BusinessObjects Explorer. In this blog I will provide a solution to these issues.

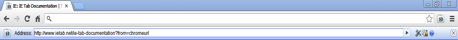

The solution we have found is to use a Google Chrome add-on called “IE tab”, which emulates Internet Explorer on Chrome.

Steps to install it:

- From Google Chrome, open the following link in the address bar and install the add-on: https://chrome.google.com/webstore/detail/hehijbfgiekmjfkfjpbkbammjbdenadd

- Once it is installed, you will see a small folder with the Internet Explorer logo in the top right corner of Google Chrome.

- Click on the folder and another address bar will appear.

- Enter the InfoView / BI Launch Pad link in the new bar and start working with it.

Moreover, if you need to click on an OpenDocument link and want it to open automatically with IE Tab in Google Chrome, you will need to add your server's URL by following these steps:

- Right click on the small folder with the IExplorer logo

- Choose Options

- Add the server's address to the Auto URLs field

The outcome

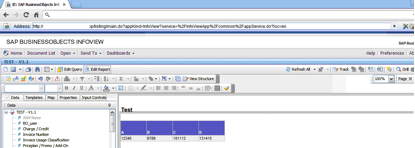

SAP BusinessObjects web-based applications can then be used successfully with Google Chrome, as the screenshot shows.

Summary

With these tips, Google Chrome lets you:

- Modify documents in InfoView / BI Launch Pad

- Log in and manage spaces in SAP BusinessObjects Explorer

- Increase the speed of navigation while going through the platform folders

According to SAP's official Product Availability Matrix (PAM), Google Chrome is not fully supported because of its rapid release cycle, so using this add-on and keeping it up to date is highly recommended.

I hope this helps you have a better experience when working with SAP BusinessObjects. If you have any questions or suggestions, please leave a comment below.

How to configure SQL Server connectivity for WebI from SAP BusinessObjects BI4.0 in Linux

We have noticed that some of our customers are following the trend towards open source products. Linux is a great choice of operating system because it is fully compatible with SAP BusinessObjects BI 4 and it also helps companies cut costs. However, Linux retains the way the classic Unix operating systems work, so everything is about permissions and shell commands. Advanced Linux know-how is therefore essential before getting into it.

The purpose of this blog entry is to share the issues we faced at one of our customers running SAP BusinessObjects BI4 SP4 on Red Hat Enterprise Linux Server release 6.3, with MySQL 5.1.61 as the system database, and how we solved them.

The issue appeared right after a production database migration (to a brand new SQL Server 2008): all their WebI documents stopped running from the SAP BI4 Launch Pad with the unusual error "Database Error .[ (IES 10901)". Every single WebI document was blocked and the whole core business was jeopardized. The Rich Client on Windows did not experience any problem. After a first analysis, we discovered that the default SQL Server ODBC driver installation on the Linux server was only configured properly for 32-bit connections, whereas WebI requires 64-bit ODBC driver connectivity to run in the SAP BI4 Launch Pad.

At this point we had to apply a couple of OSS notes. The first was OSS note 1607125 "How to configure SQL Server connectivity for WebI from a BI4.0 unix environment". The resolution is:

1. Open env.sh under <install directory>/sap_bobj/setup/

2. Search for the following line:

```sh
LIBRARYPATH="$LIBDIR:$LIBDIR32:$WCSCOMPONENTDIR:$PLUGINDIST/auth/secEnterprise:${CRPEPATH64}:${CRPEPATH}:${MWHOME}:$PLUGINDIST/desktop/CrystalEnterprise.Report:${BOBJEDIR}enterprise_xi40/$SOFTWAREPATH32/ras:${BOBJEDIR}mysql/lib"
```

3. Modify the line above by appending the following just before its closing quote:

```sh
:${BOBJEDIR}enterprise_xi40/linux_x64/odbc/lib:${BOBJEDIR}enterprise_xi40/$SOFTWAREPATH32/odbc/lib
```

The line should look like this:

```sh
LIBRARYPATH="$LIBDIR:$LIBDIR32:$WCSCOMPONENTDIR:$PLUGINDIST/auth/secEnterprise:${CRPEPATH64}:${CRPEPATH}:${MWHOME}:$PLUGINDIST/desktop/CrystalEnterprise.Report:${BOBJEDIR}enterprise_xi40/$SOFTWAREPATH32/ras:${BOBJEDIR}mysql/lib:${BOBJEDIR}enterprise_xi40/linux_x64/odbc/lib:${BOBJEDIR}enterprise_xi40/$SOFTWAREPATH32/odbc/lib"
```

4. Navigate to <install directory>/sap_bobj/enterprise_xi40

5. Open the odbc.ini file using vi or another text editor.

6. Find the entry for the SQL Server DSN. The default DSN entry in odbc.ini is called "[SQL Server Native Wire Protocol]", but it is recommended that you create your own DSN entry using the same parameters as the default one.

7. Update the "Driver" entry of the DSN to point to the 64-bit version of the SQL Server ODBC driver:

```ini
Driver=<install directory>/sap_bobj/enterprise_xi40/linux_x64/odbc/lib/CRsqls24.so
```

8. Restart the SIA (Server Intelligence Agent).
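Since the root cause was a 32-bit driver being used where a 64-bit one is required, it can help to check what a DSN actually points to before restarting the SIA. Here is a minimal sketch. It assumes the odbc.ini layout from step 7 (a `[DSN]` section containing a `Driver=` line) and that the standard Linux `file` utility is installed. The function names are hypothetical and not part of any SAP tool.

```sh
#!/bin/sh
# Hypothetical helpers (not from SAP): print the Driver= value of a DSN section
# in an odbc.ini file, then check that the library it names is a 64-bit ELF.
dsn_driver() {
  awk -v section="[$2]" '
    $0 == section            { in_section = 1; next }   # start of the wanted DSN
    /^\[/                    { in_section = 0 }         # any other section ends it
    in_section && /^Driver=/ { sub(/^Driver=/, ""); print; exit }
  ' "$1"
}

check_dsn_driver() {
  driver=$(dsn_driver "$1" "$2")
  if [ -z "$driver" ]; then
    echo "No Driver entry for [$2] in $1"
    return 1
  fi
  # file -L follows symlinks; a 64-bit library reports "ELF 64-bit ...".
  case "$(file -L "$driver" 2>/dev/null)" in
    *"ELF 64-bit"*) echo "OK: $driver is a 64-bit library" ;;
    *) echo "WARNING: $driver is missing or not a 64-bit ELF library"; return 1 ;;
  esac
}
```

Usage example, with your own paths and DSN name: `check_dsn_driver <install directory>/sap_bobj/enterprise_xi40/odbc.ini "SQL Server Native Wire Protocol"`.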

However, this did not completely resolve the issue. Whenever we tried to run a WebI document, we received a new error: "Database error: [DataDirect][ODBC lib] System information file not found. Please check the ODBCINI environment variable.. (IES 10901) (WIS 10901)". This is a configuration issue with the ODBCINI environment variable on the Linux operating system. Make sure your environment variables are set correctly according to OSS note 1291142 - "Web Intelligence reporting using DataDirect drivers in Unix" (as of today it still applies to BI4). The resolution is:

1. In the Bobje user's Unix profile, add or modify the following environment variables and source the profile:

BOBJEDIR=<install_path>/bobje

export BOBJEDIR

ODBC_HOME=$BOBJEDIR/enterprise120/<platform>/odbc

export ODBC_HOME

ODBCINI=$BOBJEDIR/odbc.ini

export ODBCINI

LD_LIBRARY_PATH=$BOBJEDIR/enterprise120/<platform>/dataAccess/RDBMS/connectionServer:$ODBC_HOME/lib:$BOBJEDIR/enterprise120/<platform>/:$LD_LIBRARY_PATH

export LD_LIBRARY_PATH

NOTE: For AIX, replace LD_LIBRARY_PATH with LIBPATH; for HP-UX, use SHLIB_PATH.

NOTE: Replace <platform> with linux_x86, solaris_sparc, aix_rs6000 or hpux_pa-risc, depending on your Unix platform.

NOTE: You must set and export the variables above in the same order as shown.

We recommend using the file $HOME/.odbc.ini as your default source for ODBC settings. To do so, set the ODBCINI variable as follows instead:

ODBCINI=$HOME/.odbc.ini

export ODBCINI

2. Modify odbc.ini to add the DSN:

[TestDSN]

Driver=<install_path>/enterprise120/<platform>/odbc/lib/CRmsss23.so

Description=DataDirect 5.3 SQLServer Wire Protocol Driver

Address=<sql_server host or ip>, <port>

Database=<db_name>

QuotedId=Yes

AnsiNPW=No

NOTE: Your DSN name (TestDSN) must match the DSN name you used when creating the ODBC connection in Windows.

3. DataDirect provides both non-OEM and OEM drivers.

The drivers shipped with BI4 are OEM drivers. WebI depends on the Connection Server, which by default is set to use non-OEM drivers. We therefore edited the Connection Server configuration to allow the use of the OEM-branded DataDirect (DD) driver. The steps are:

- Make a backup copy of $BOBJEDIR/enterprise120/<platform>/dataAccess/RDBMS/connectionServer/odbc/odbc.sbo

- Open odbc.sbo with vi and search for DataDirect. There are 4 entries, one for each supported MS SQL Server version.

- Change all 4 from No to Yes: <Parameter Name="Use DataDirect OEM Driver" Platform="Unix">Yes</Parameter>

4. Stop and restart all servers

Run ./stopservers, log out of your Unix shell completely and log back in (so that the new environment variables are picked up), then start all BI4 servers again.
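If you want to verify step 1 and automate step 3, a small script can help. The sketch below assumes Python 3 is available for the Bobje user; check_odbc_env and enable_oem_driver are hypothetical helpers, and the regular expression assumes the attributes appear in the order shown above (Name, then Platform). Run the check after logging back in, so that it sees the same environment the servers will be started with.

```python
import os
import re
from typing import List, Mapping


# --- Step 1: sanity-check the ODBC-related environment variables ---
def check_odbc_env(env: Mapping[str, str], lib_var: str = "LD_LIBRARY_PATH") -> List[str]:
    """Return a list of problems (an empty list means the variables look consistent).

    lib_var: LD_LIBRARY_PATH by default, LIBPATH on AIX, SHLIB_PATH on HP-UX.
    """
    problems = []
    odbcini = env.get("ODBCINI")
    if not odbcini:
        problems.append("ODBCINI is not set")
    elif not os.path.isfile(odbcini):
        problems.append(f"ODBCINI points to a missing file: {odbcini}")
    odbc_home = env.get("ODBC_HOME")
    if not odbc_home:
        problems.append("ODBC_HOME is not set")
    else:
        wanted = os.path.normpath(os.path.join(odbc_home, "lib"))
        on_path = {os.path.normpath(d) for d in env.get(lib_var, "").split(":") if d}
        if wanted not in on_path:
            problems.append(f"{wanted} is not on {lib_var}")
    return problems


# --- Step 3: switch "Use DataDirect OEM Driver" to Yes in the text of a .sbo file ---
OEM_PARAM_RE = re.compile(
    r'(<Parameter Name="Use DataDirect OEM Driver" Platform="Unix">)\s*(Yes|No)\s*(</Parameter>)'
)


def enable_oem_driver(sbo_text: str):
    """Return (new_text, number_of_entries_switched_from_No_to_Yes)."""
    switched = 0

    def _to_yes(match):
        nonlocal switched
        if match.group(2) == "No":
            switched += 1
        return match.group(1) + "Yes" + match.group(3)

    return OEM_PARAM_RE.sub(_to_yes, sbo_text), switched


if __name__ == "__main__":
    for problem in check_odbc_env(os.environ) or ["ODBC variables look consistent"]:
        print(problem)
```

enable_oem_driver only transforms text, so you still make the backup copy of odbc.sbo yourself before writing the result back; for the file described above, the returned count should be 4. The same function can be applied to sqlsrv.sbo in BI4.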

After applying the OSS note, we were able to retrieve data from SQL Server 2008 when refreshing our WebI documents. However, we noticed that CPU usage reached 100% every time we used a WebI document in any way. Going through the log files, we found errors such as "MS SQL Server 2008 |JobId:61340512 |EXIT SQLGetDiagRec with return code -1 (SQL_ERROR)".

Looking at the odbc.ini file, we found that a QEWSD entry, which was not there initially, had somehow appeared. Since we had copied the information from an existing data source, we did not need it, so we removed the QEWSD=<random string> line from the ini file.
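Removing the QEWSD line by hand is easy, but if odbc.ini has to be cleaned on several servers, a sketch like the following can do it. It assumes the entry always appears as QEWSD=<value> on its own line; strip_qewsd is a hypothetical helper name, and the script keeps a .bak copy before rewriting the file.

```python
import re
import sys
from pathlib import Path

# A whole "QEWSD=<value>" line (optionally indented), including its line break.
QEWSD_RE = re.compile(r"^[ \t]*QEWSD[ \t]*=.*(?:\r?\n|$)", re.MULTILINE)


def strip_qewsd(odbc_ini: str) -> str:
    """Return the odbc.ini text without any QEWSD=<value> lines."""
    return QEWSD_RE.sub("", odbc_ini)


if __name__ == "__main__" and len(sys.argv) > 1:
    ini_path = Path(sys.argv[1])  # e.g. the file your ODBCINI variable points to
    text = ini_path.read_text()
    ini_path.with_name(ini_path.name + ".bak").write_text(text)  # keep a backup
    ini_path.write_text(strip_qewsd(text))
```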

Finally, double-check that the <Parameter Name="Use DataDirect OEM Driver" Platform="Unix"> entry in the sqlsrv.sbo file, located in /opt/bi40/sap_bobj/enterprise_xi40/dataAccess/connectionServer/odbc, is set to Yes.

We hope our experience helps you solve this problem quickly. If you have any tips or suggestions to improve this article, please leave a comment below.

Tomcat Upgrade from version 5 to 6 in BOXI 3.x: close the security risk

This post guides you through upgrading Tomcat from version 5 to 6 in BOXI 3.x to close a security hole. We performed the upgrade in an environment with Windows Server, SAP BusinessObjects Enterprise XI 3.1 SP3 and Apache Tomcat 5.5; the new Apache Tomcat version was 6.0.36.

Resolution and steps

All instructions below use the default paths for SAP BusinessObjects and Tomcat 6 installations on a Windows system, where the files are installed in “C:\Program Files”; replace these folders with your own.

1) Download the Tomcat 6.0.x Windows service installer (where x is the version you want).

2) This step is only needed if your SAP BusinessObjects installation does not have the Java JDK installed:

2a) Download the JDK 5.0 Update 22.

2b) Install the JDK 5.0 Update 22 package.

3) Run the Tomcat 6.0.x installer. The Welcome screen will appear. Click Next.

4) Click "I Agree" on the License Agreement screen.

5) Select the install type from the drop-down box and click Next.

6) Enter the destination folder where Tomcat 6 is to be installed. Click Next.

7) Enter the user name and password for the Administrator login and click Next.

8) Enter the path to the JRE supplied with BOE XI 3.1 (or to the JDK in “C:\Program Files\Java\jdk1.5.0_22” installed in step 2). Click Install.

9) Uncheck the "Show Readme" check box. Click Finish.

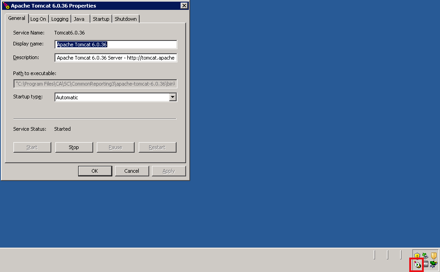

10) Tomcat will now start, and a small Tomcat icon will appear in the system tray.

11) If your system is 64-bit, you can download the Tomcat 6.0.x 64-bit binaries. You need both the tomcat.exe and tomcat6.exe files.

11a) Stop Tomcat, back up the current tomcat.exe and tomcat6.exe files in the Tomcat installation directory (“C:\Program Files\Apache Software Foundation\Tomcat 6.0\bin”), and overwrite them with the 64-bit versions.

11b) Start the Tomcat service again.

12) Right-click the icon and click Configure.

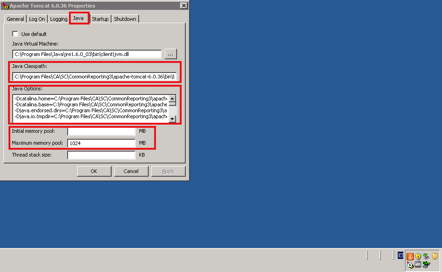

13) The Apache Tomcat Properties screen will appear. Click the Java tab.

14) Add the path "C:\Program Files\Business Objects\javasdk\lib\tools.jar" to the Java Classpath field, after the existing entry, separated by a semicolon (;).

15) Enter 1024 (in MB; the right value depends on your RAM) in the Minimum and Maximum memory pool fields.

16) Add the following values to the Java Options field, one per line:

-Dbobj.enterprise.home=C:/Program Files/Business Objects/BusinessObjects Enterprise 12.0/

-Xrs

-XX:MaxPermSize=512M

-Dbusinessobjects.olap.bin=

-Dbusinessobjects.olap.stylesheets=C:/Program Files/Business Objects/OLAP Intelligence 12.0/stylesheets/

-Djava.awt.headless=true

-Daf.configdir=C:/Program Files/Business Objects/Dashboard and Analytics 12.0

17) Click Apply, then OK, and restart the Tomcat service.

18) Open the file config.tomcat6 in Notepad. It is located in the "C:\Program Files\Business Objects\deployment" folder.

19) Uncomment the variable "as_service_name".

20) Assign the following values to the respective variables:

- as_dir=<installation directory of Tomcat 6>

- as_instance=localhost

- as_service_name=Tomcat6

21) The file should look something like this:

##

## Business Objects Configuration Utility

##

# as_dir: the installation directory of the application server

as_dir=C:\Program Files\Apache Software Foundation\Tomcat 6.0

# as_instance: the application server instance to deploy to (represents the name of a folder in the conf/Catalina directory)

as_instance=localhost

# as_service_name: on windows, the name of the tomcat service when tomcat is installed as a service

as_service_name=Tomcat6

# as_service_key: on windows, when tomcat is installed as a service, the name of the key where the java startup parameters are stored

# (there is generally no need to touch this)

as_service_key=HKLM\SOFTWARE\Apache Software Foundation\Procrun 2.0\${as_service_name}\Parameters\Java

# as_service_key_value: name of the String value where the java startup parameters are stored, in the key pointed to by as_service_key

# (there is generally no need to touch this)

as_service_key_value=Options

22) Save and close the file.

23) Open the file tomcat6.xml in Notepad. It is located in the "C:\Program Files\Business Objects\deployment" folder.

24) Make sure that the file has the correct path to the Tomcat6.0.x executable. This path is the one where Apache Tomcat was installed.

<exec dir="${as_dir}/bin" executable="${as_dir}/bin/Tomcat6.0.36.exe" failonerror="false">

25) If the path is not correct, update it accordingly.

26) Save and close the file.

27) In the “C:\Program Files\Apache Software Foundation\Tomcat 6.0\conf” directory, create a folder called Catalina. Within the Catalina folder, create another folder called localhost:

“C:\Program Files\Apache Software Foundation\Tomcat 6.0\conf\Catalina\localhost”

28) Open a Command Prompt (always as Administrator) by clicking Start, Run, typing "cmd" and clicking OK.

29) Change to the deployment directory within your SAP BusinessObjects installation path ("C:\Program Files\Business Objects\deployment").

30) Run the command "wdeploy tomcat6 deployall".

31) A BUILD SUCCESSFUL message appears once all WAR files have been deployed successfully. If it does not, review the failures and correct them as needed.

32) Apache Tomcat 6.0.x is now deployed to and configured for usage with SAP BusinessObjects Enterprise XI 3.1 SP3.

33) You can now use the Windows Services management tool or the Tomcat Configuration tool to set Tomcat to automatically start on system boot if you wish.
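Steps 19 to 31 can be partly scripted. The following Python sketch updates the contents of config.tomcat6 and runs the deployment. It assumes the default paths used in this post, that wdeploy in the deployment folder is a batch script that has to go through cmd.exe (hence shell=True), and that it is run from an elevated prompt. update_config, build_succeeded and deploy_all are hypothetical names; the GUI steps (Tomcat installer, Java tab settings) are not covered.

```python
import re
import subprocess
from pathlib import Path

# Default paths used in this post (adjust them to your own installation).
TOMCAT_HOME = Path(r"C:\Program Files\Apache Software Foundation\Tomcat 6.0")
DEPLOY_DIR = Path(r"C:\Program Files\Business Objects\deployment")

# Steps 19-20: values for config.tomcat6.
SETTINGS = {
    "as_dir": str(TOMCAT_HOME),
    "as_instance": "localhost",
    "as_service_name": "Tomcat6",
}


def update_config(text: str, settings=SETTINGS) -> str:
    """Set each key in config.tomcat6-style text, uncommenting it if needed."""
    for key, value in settings.items():
        # Matches "key=..." or a commented-out "#key=..." line, not "# key: description".
        pattern = re.compile(rf"^[ \t]*#?[ \t]*{re.escape(key)}=.*$", re.MULTILINE)
        line = f"{key}={value}"
        if pattern.search(text):
            # A function replacement keeps the backslashes in Windows paths literal.
            text = pattern.sub(lambda _m: line, text, count=1)
        else:
            text = text.rstrip("\n") + "\n" + line + "\n"
    return text


def build_succeeded(output: str) -> bool:
    """Step 31: wdeploy prints BUILD SUCCESSFUL when all WAR files were deployed."""
    return "BUILD SUCCESSFUL" in output


def deploy_all(tomcat_home: Path = TOMCAT_HOME, deploy_dir: Path = DEPLOY_DIR) -> bool:
    """Steps 27-31; run this from an Administrator command prompt."""
    (tomcat_home / "conf" / "Catalina" / "localhost").mkdir(parents=True, exist_ok=True)
    result = subprocess.run(
        "wdeploy tomcat6 deployall",  # assumed to be a batch file, hence shell=True
        cwd=deploy_dir,
        shell=True,
        capture_output=True,
        text=True,
    )
    output = result.stdout + result.stderr
    print(output)
    return build_succeeded(output)
```

In practice you would run update_config on the contents of config.tomcat6, review the result before saving it, and then call deploy_all(); a False result means the BUILD SUCCESSFUL message was not found and the printed wdeploy output needs to be reviewed.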

With this guide, you should be all set for the upgrade. We hope it helps you move your applications to Tomcat 6 quickly and close the security risk.

If you have any questions or anything to add to help improve this post, please feel free to leave your comments.

Managing ETL dependencies with BusinessObjects Data Services (Part 1)

Are you satisfied with the way you currently manage the dependencies in your ETL? Dependencies between jobs (or parts of jobs) are an important aspect of ETL management. They raise questions such as: Do you want to execute job B if job A failed? Imagine that you have a job C with sub-job 1 (usual runtime: 3 hours) and sub-job 2 (usual runtime: 2 minutes). If sub-job 1 was successful and sub-job 2 failed, can you gracefully restart job C without restarting sub-job 1?

As soon as you have more than one simple job, you have to manage your dependencies. In this article (part 1 of a series about ETL dependency management), I’ll first list some of the characteristics I look for in an ideal dependency management system. I will then look at some of the possibilities offered by SAP Data Services 4. In part 2 (my next post), I will propose the architecture of a possible dependency management system. In part 3, I will go into the details of its implementation in Data Services. I’ll finish with part 4 by telling you how the implementation went and whether any improvements are possible.

The ideal dependency management system

In this post I will use the word “process” to denote a series of ETL operations that belong together, for example extracting a source table, creating a dimension, or updating a fact table. The objective here is to manage the dependencies between the processes: updating a fact table should probably only be allowed if updating the corresponding dimensions was successful.

A dependency management system should ideally have at least the following characteristics:

- Run a process only if its prerequisites ran correctly

- After a failure, offer the option to re-run all the processes or only the processes which failed

- Trace the outcome of each process (ran successfully, failed, did not run)

- Run dependent processes dynamically (rather than statically, i.e. based on date/time)
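To make these four characteristics concrete, here is a small in-memory Python sketch (Python 3.9+ for graphlib). It is not Data Services code and not the architecture I will propose in part 2; it only illustrates the behavior we are after: processes run in dependency order as soon as their prerequisites succeed, each outcome is traced, and a restart can be limited to the processes that failed or did not run. The process names and functions are hypothetical.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, Iterable, Optional, Set

SUCCESS, FAILED, NOT_RUN = "ran successfully", "failed", "did not run"


def run_processes(
    deps: Dict[str, Set[str]],
    actions: Dict[str, Callable[[], None]],
    only: Optional[Iterable[str]] = None,
    previous: Optional[Dict[str, str]] = None,
) -> Dict[str, str]:
    """Run processes in dependency order and return the outcome of each one.

    deps maps every process to the set of its prerequisites (empty set if none).
    A process runs only if all its prerequisites succeeded, either in this run or,
    on a restart, in `previous`. When `only` is given, the other processes are not
    run again and keep their previous outcome.
    """
    outcome = dict(previous or {})
    selected = set(deps) if only is None else set(only)
    for proc in TopologicalSorter(deps).static_order():
        if proc not in selected:
            continue
        if any(outcome.get(pre) != SUCCESS for pre in deps.get(proc, ())):
            outcome[proc] = NOT_RUN  # a prerequisite failed or did not run
            continue
        try:
            actions[proc]()
            outcome[proc] = SUCCESS
        except Exception:  # any error marks this process as failed; the others go on
            outcome[proc] = FAILED
    return outcome


def to_restart(outcome: Dict[str, str]) -> Set[str]:
    """Processes that failed or did not run: the candidates for a partial re-run."""
    return {proc for proc, result in outcome.items() if result != SUCCESS}
```

For example, if a dimension load fails, the fact table that depends on it is marked "did not run"; calling run_processes again with only=to_restart(outcome) and previous=outcome re-runs just those two processes and leaves the successful ones alone.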

The possibilities

Let’s enumerate some of the possibilities offered by Data Services, with their respective pros and cons.

1) One job with all processes inside. This is very easy to implement, dynamic in terms of run times, but it doesn’t allow for concurrent runs. Most importantly, it means that failures have to be managed so that the failure of one process does not stop the whole job.

2) One process per job, with jobs scheduled at specific times. This is very easy to implement, allows concurrent runs, but is not dynamic enough. If the process durations increase with the months/years, jobs may overlap.

3) One main job calling other jobs (for example with execution commands or Web Services).

4) One process per job, all jobs scheduled at specific times, but each one first checks in a control table whether its prerequisites ran successfully. If not, it sleeps for some time before checking again.

5) Use the BOE Scheduler to manage jobs based on events (the how-to is well described on SCN). I haven’t tested it yet, but I like this approach.

By default, the first two possibilities only manage the “flow” side of dependency management (after A, do B). They do not manage the conditional side (do B only if A was successful). In both cases, a control table updated by SQL scripts would allow the ETL to check whether the prerequisite processes ran correctly.

What I don’t really like about solutions 2 to 5 is that it’s difficult to get an overview of what’s going on: you cannot easily navigate within the whole ETL. Solution 1 gives you this overview, but at the cost of a potentially huge job (with no possibility of running processes concurrently).
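As an illustration of such a control table, here is a sketch that uses SQLite through Python's sqlite3 module as a stand-in for your ETL database. The etl_control table, its columns and the helper functions are hypothetical; in Data Services, the equivalent INSERT and SELECT statements would live in the SQL scripts mentioned above. wait_for_prerequisites corresponds to possibility 4 (check, sleep, check again).

```python
import sqlite3
import time
from typing import Iterable

# Hypothetical control table: one row per process and run date.
SCHEMA = """
CREATE TABLE IF NOT EXISTS etl_control (
    process  TEXT NOT NULL,
    run_date TEXT NOT NULL,
    status   TEXT NOT NULL CHECK (status IN ('RUNNING', 'SUCCESS', 'FAILED')),
    PRIMARY KEY (process, run_date)
)
"""


def set_status(conn: sqlite3.Connection, process: str, run_date: str, status: str) -> None:
    """Record the outcome of a process (what the script after each process would do)."""
    conn.execute(
        "INSERT OR REPLACE INTO etl_control (process, run_date, status) VALUES (?, ?, ?)",
        (process, run_date, status),
    )
    conn.commit()


def prerequisites_ok(conn: sqlite3.Connection, prerequisites: Iterable[str], run_date: str) -> bool:
    """True if every prerequisite has a SUCCESS row for this run date."""
    prereqs = sorted(set(prerequisites))
    if not prereqs:
        return True
    placeholders = ",".join("?" for _ in prereqs)
    (count,) = conn.execute(
        "SELECT COUNT(*) FROM etl_control WHERE run_date = ? AND status = 'SUCCESS' "
        f"AND process IN ({placeholders})",
        [run_date, *prereqs],
    ).fetchone()
    return count == len(prereqs)


def wait_for_prerequisites(conn, prerequisites, run_date, poll_seconds=60, timeout_seconds=3600) -> bool:
    """Possibility 4: check the control table, sleep, then check again until a timeout."""
    prerequisites = list(prerequisites)  # allow generators to be checked repeatedly
    deadline = time.monotonic() + timeout_seconds
    while not prerequisites_ok(conn, prerequisites, run_date):
        if time.monotonic() >= deadline:
            return False
        time.sleep(poll_seconds)
    return True
```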

Also note that the solutions with multiple jobs will need to manage the initialization of the global variables.

What I miss in all these solutions is an optimal re-start of the ETL. If 10 of my 50 processes failed, and I want to restart these 10 only, do I really have to start them manually?

In my next blog post I’ll propose an architecture that addresses this optimal restart.

Until then, please let me know how you manage your ETL dependencies. Do you use any of the 5 solutions mentioned above? A mix? Something else? And how well does it work for you?