In today’s post, we recount our journey through an ocean of NULLs. It is the story of how we moved from the mystical (our initial expectations and assumptions) to the practical: a problem-solving methodology that became an integral, reusable part of our data science framework.

Data science projects raise difficulties that call for adept problem solving. A technical problem usually has several possible solutions, and we choose among them based on criteria such as:

Time restrictions.

Budget limitations.

Implementation complexity.

Measurement of effectiveness (the method Clariba uses to measure how efficiently a solution has performed).

A recurring challenge in our projects is dealing with NULL values. In data science projects, it is practically guaranteed that the team will encounter NULL values in most fields during feature engineering or prediction engineering. There are three main factors we consider when dealing with NULLs:

Frequency of the NULLs.

Reasons causing the NULLs.

Accuracy/reliability of the method used to fix the NULLs.

We designed a plan that took these criteria and factors into account, and applied it to a business case. The process and results described here illustrate the positive impact of our approach on resolving these values. Please note that all values shown in this post are from mock data used to illustrate our methodology.

Predicting whether a customer will be late with a payment this month, based on historical behavior

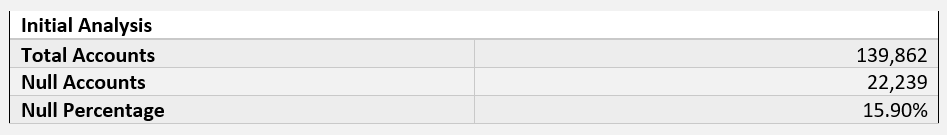

During the feature engineering stage, we decided to include the Nationality feature in order to assess its correlation with user behavior. We conducted a brief NULL analysis that produced the table below:
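A brief NULL analysis of this kind can be sketched in a few lines of Python. This is only an illustration, not the code we ran: the record layout, the field names and the sample values are all invented mock data, and `null_report` is a hypothetical helper.

```python
def null_report(records, fields):
    """Return {field: (null_count, null_ratio)} for a list of dict records."""
    total = len(records)
    report = {}
    for field in fields:
        # Treat both a missing key and an explicit None as NULL.
        nulls = sum(1 for record in records if record.get(field) is None)
        report[field] = (nulls, nulls / total if total else 0.0)
    return report


# Invented mock accounts; None stands in for a database NULL.
accounts = [
    {"account_id": 1, "nationality": "Spanish", "area": "North"},
    {"account_id": 2, "nationality": None, "area": "North"},
    {"account_id": 3, "nationality": "Italian", "area": None},
    {"account_id": 4, "nationality": None, "area": "South"},
]

report = null_report(accounts, ["nationality", "area"])
for field, (count, ratio) in report.items():
    print(f"{field}: {count} NULLs ({ratio:.0%})")
```

Reporting the ratio alongside the raw count makes it easier to judge the first factor listed earlier, the frequency of the NULLs.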

Improving this feature would be useful in the training phase of our ML modeling stage. As these NULLs belong to the Nationality feature, it would not be effective to lump them into a single group and call it N/A. Doing so would skew the model, which would treat N/A as a single nationality with uniform behavior, when in reality it is an amalgam of all the other nationalities and more. So how was this resolved?

First, it was important to understand why these NULLs were present at all. After some analysis, we discovered it was simply due to bad data entry practices. This finding shaped the solution we describe next.

Step 1 – Reengineering the feature mappings

We decided on a two-step approach. The first step was to look for other features in the same database that could indicate what a NULL nationality should be. One turned out to be far more useful than we had anticipated: Nationality Code. We found many cases where a nationality code was present but the nationality itself was registered as NULL.

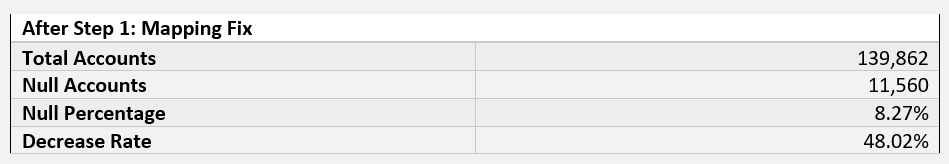

After a significant amount of manual work, we were able to fix the mapping issues caused by the mismatch in the data. Consequently, we were able to arrive at the table below.

As can be observed, we were able to remove almost half the NULLs by fixing the mapping. We were confident these corrections were right because each one was derived directly from the Nationality Code feature.
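The idea behind this first step can be sketched as follows. The lookup table, the field names and the sample codes are hypothetical; in our case the mapping was repaired through manual work, so treat this as a minimal illustration under those assumptions.

```python
# Hypothetical lookup table; the real code-to-nationality mapping is not
# shown in this post and was built manually.
CODE_TO_NATIONALITY = {"ES": "Spanish", "IT": "Italian"}


def fill_from_code(records, lookup):
    """Return (new_records, filled_count), filling NULL nationalities from the code."""
    repaired, filled = [], 0
    for record in records:
        record = dict(record)  # copy so the input is left untouched
        if record.get("nationality") is None:
            name = lookup.get(record.get("nationality_code"))
            if name is not None:
                record["nationality"] = name
                filled += 1
        repaired.append(record)
    return repaired, filled


# Invented mock accounts; None stands in for a database NULL.
accounts = [
    {"account_id": 1, "nationality": "Spanish", "nationality_code": "ES"},
    {"account_id": 2, "nationality": None, "nationality_code": "IT"},
    {"account_id": 3, "nationality": None, "nationality_code": None},
    {"account_id": 4, "nationality": None, "nationality_code": "XX"},  # unknown code
]

repaired, filled = fill_from_code(accounts, CODE_TO_NATIONALITY)
print(f"Filled {filled} of {len(accounts)} accounts from the nationality code")
```

Accounts with no code, or with a code missing from the lookup, stay NULL and are left for the second step.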

However, there was more to accomplish. At this stage, there were various methods we could have used to deal with the remaining NULLs. Because we were short on time, we implemented a method suited to this particular problem: re-applying the distribution of the known sample to the NULLs.

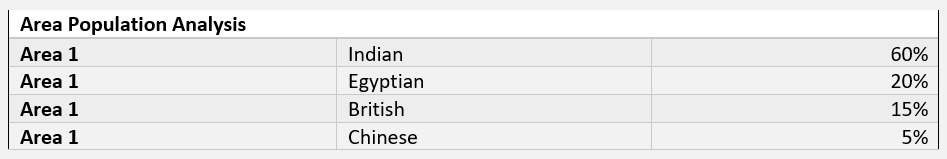

We knew this data contained details of families or individuals living in different areas of the city, and we also knew that each area tends to have a distribution skewed toward a specific nationality.

To reapply the distribution, we again worked in two steps:

Step 1: We tabulated each area and the distribution of nationalities within it, as seen in the following table:
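Computing the per-area distribution could look roughly like the sketch below. The `area` and `nationality` field names and the sample rows are invented for illustration, and `area_distributions` is a hypothetical helper rather than the code we used.

```python
from collections import Counter, defaultdict


def area_distributions(records):
    """Return {area: {nationality: share}} computed from known nationalities only."""
    counts = defaultdict(Counter)
    for record in records:
        if record["nationality"] is not None:  # skip NULLs
            counts[record["area"]][record["nationality"]] += 1

    distributions = {}
    for area, counter in counts.items():
        total = sum(counter.values())
        distributions[area] = {nat: n / total for nat, n in counter.items()}
    return distributions


# Invented mock accounts; None stands in for a database NULL.
accounts = [
    {"area": "North", "nationality": "Spanish"},
    {"area": "North", "nationality": "Spanish"},
    {"area": "North", "nationality": "Spanish"},
    {"area": "North", "nationality": "Italian"},
    {"area": "North", "nationality": None},
    {"area": "South", "nationality": "Italian"},
]

distributions = area_distributions(accounts)
for area, shares in distributions.items():
    print(area, shares)
```

NULL rows are excluded from the counts, so the shares in each area sum to 1 over the known nationalities only.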

Step 2 – Redistributing based on the known sample

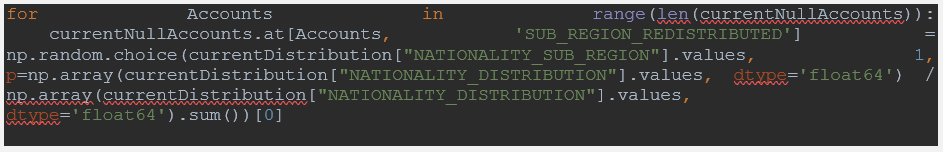

In the second step, we used NumPy, a powerful Python library, to go through each account with a NULL nationality in those areas. For each one, we drew a nationality from the area's known distribution using the np.random.choice function, which takes the list of candidate elements and, through its p parameter, their probabilities.
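A minimal sketch of this sampling step is shown below. It uses `choice` from NumPy's newer `Generator` API (`np.random.default_rng`), which accepts the same `a` and `p` arguments as `np.random.choice`; the fixed seed, the field names and the mock distribution are assumptions made for the example.

```python
import numpy as np


def impute_nationalities(records, distributions, seed=0):
    """Fill NULL nationalities by sampling from each area's known distribution."""
    rng = np.random.default_rng(seed)  # seeded so the run is repeatable
    imputed = []
    for record in records:
        record = dict(record)  # copy so the input is left untouched
        dist = distributions.get(record["area"])
        if record["nationality"] is None and dist:
            names = list(dist.keys())
            probs = list(dist.values())  # must sum to 1
            record["nationality"] = str(rng.choice(names, p=probs))
        imputed.append(record)
    return imputed


# Invented mock distribution and accounts.
distributions = {"North": {"Spanish": 0.75, "Italian": 0.25}}
accounts = [
    {"account_id": 1, "area": "North", "nationality": "Spanish"},
    {"account_id": 2, "area": "North", "nationality": None},
    {"account_id": 3, "area": "North", "nationality": None},
    {"account_id": 4, "area": "West", "nationality": None},  # no known distribution
]

imputed = impute_nationalities(accounts, distributions)
for record in imputed:
    print(record)
```

Sampling per account, rather than assigning the most common nationality to every NULL, keeps the imputed values in roughly the same proportions as the known sample.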

Please note that we applied this method only to areas with more than 100 accounts of known nationality and a NULL ratio of at most 20%, in order to ensure that the sample of known nationalities represented the whole population. Naturally, this method rests on an assumption, and more accurate prediction methods exist (such as KNN). However, these would have taken more time and would have required additional features beyond the area feature.
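The eligibility rule can be expressed as a small filter. In this sketch the NULL ratio is assumed to mean NULL accounts divided by all accounts in the area, which is our reading of the rule rather than a formula stated above; the counts are mock values and `eligible_areas` is a hypothetical helper.

```python
def eligible_areas(known_counts, null_counts, min_known=100, max_null_ratio=0.20):
    """Return areas with more than min_known known rows and a NULL ratio within the cap."""
    eligible = []
    for area, known in known_counts.items():
        nulls = null_counts.get(area, 0)
        total = known + nulls
        if total == 0:
            continue  # empty area, nothing to evaluate
        # Assumption: NULL ratio = NULL accounts / all accounts in the area.
        if known > min_known and nulls / total <= max_null_ratio:
            eligible.append(area)
    return eligible


# Invented mock counts per area.
known_counts = {"North": 400, "South": 90, "East": 150}
null_counts = {"North": 50, "South": 5, "East": 100}

print(eligible_areas(known_counts, null_counts))
```

Here South fails the minimum sample size and East exceeds the NULL ratio cap, so only North would be redistributed.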

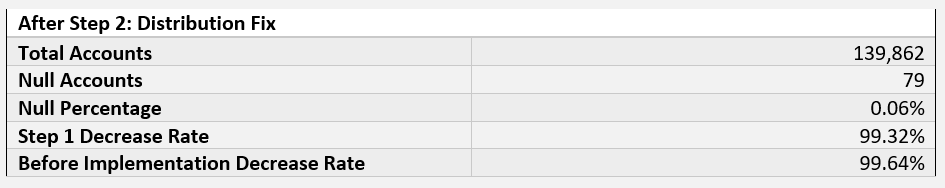

After applying the distribution method to our NULLs, we achieved the result shown below:

After implementing our two-step solution, we succeeded in decreasing the NULLs by 99%! We then assigned a dedicated N/A value to the remaining 79 accounts, as so few records would not distort the model during training.

Summing it all up

Although not every new idea has a positive outcome when applied for the first time, we were successful in both our first and second attempts and validated a sustainable methodology for dealing with NULL values. We were able to conclude that data science is not solely about implementing ML models. It also entails providing value to businesses using cutting-edge technology and effective problem-solving skills.

The mix of machine learning and human perspective allowed us to realize that issues such as the NULL problem explained above arise mainly from a poor flow of data and poor data entry.

Applying our approach to this case study also enabled us to propose a collaboration to improve data quality and eliminate the root cause of the NULL values. The fix could be implemented in the various personal information forms by presenting nationality as a drop-down menu in place of a text box. A data entry officer adding a new account would then be required to select the nationality from this list.

Have you ever dealt with NULL values? If so, how did you manage to decrease them? Was our experience useful to you? Let us know your opinions in the comments below.